Tuesday, January 31, 2023

New – AWS CloudTrail Lake Supports Ingesting Activity Events From Non-AWS Sources

In November 2013, we announced AWS CloudTrail to track user activity and API usage. AWS CloudTrail enables auditing, security monitoring, and operational troubleshooting. CloudTrail records user activity and API calls across AWS services as events. CloudTrail events help you answer the question “who did what, where, and when?”

Recently we have improved the ability for you to simplify your auditing and security analysis by using AWS CloudTrail Lake. CloudTrail Lake is a managed data lake for capturing, storing, accessing, and analyzing user and API activity on AWS for audit, security, and operational purposes. You can aggregate and immutably store your activity events, and run SQL-based queries for search and analysis.

We have heard your feedback that aggregating activity information from diverse applications across hybrid environments is complex and costly, but important for a comprehensive picture of your organization’s security and compliance posture.

Today we are announcing support for ingesting activity events from non-AWS sources using CloudTrail Lake, making it a single location for immutable user and API activity events for auditing and security investigations. Now you can consolidate, immutably store, search, and analyze activity events from AWS and non-AWS sources, such as in-house or SaaS applications, in one place.

Using the new PutAuditEvents API in CloudTrail Lake, you can centralize user activity information from disparate sources into CloudTrail Lake, enabling you to analyze, troubleshoot, and diagnose issues using this data. CloudTrail Lake records all events in a standardized schema, making it easier for users to consume this information and respond comprehensively and quickly to security incidents or audit requests.

CloudTrail Lake is also integrated with selected AWS Partners, such as Cloud Storage Security, Clumio, CrowdStrike, CyberArk, GitHub, Kong Inc, LaunchDarkly, MontyCloud, Netskope, Nordcloud, Okta, One Identity, Shoreline.io, Snyk, and Wiz, allowing you to easily enable audit logging through the CloudTrail console.

Getting Started to Integrate External Sources

You can start to ingest activity events from your own data sources or partner applications by choosing Integrations under the Lake menu in the AWS CloudTrail console.

To create a new integration, choose Add integration and enter your channel name. You can choose the partner application source from which you want to get events. If you’re integrating with events from your own applications hosted on-premises or in the cloud, choose My custom integration.

For Event delivery location, you can choose destinations for your events from this integration. This allows your application or partners to deliver events to your CloudTrail Lake event data store. An event data store can retain your activity events for anywhere from one week up to seven years. Then you can run queries on the event data store.

Choose either Use existing event data stores or Create new event data store to receive events from integrations. To learn more about event data stores, see Create an event data store in the AWS documentation.

You can also set up the permissions policy for the channel resource created with this integration. The information required for the policy depends on the integration type of each partner application.

There are two types of integrations: direct and solution. With direct integrations, the partner calls the PutAuditEvents API to deliver events to the event data store for your AWS account. In this case, you need to provide the External ID, the unique account identifier provided by the partner. You can see a link to the partner website for the step-by-step guide. With solution integrations, the application runs in your AWS account and the application calls the PutAuditEvents API to deliver events to the event data store for your AWS account.

To find the Integration type for your partner, choose the Available sources tab from the integrations page.

After creating an integration, you will need to provide the channel ARN to the source or partner application. Until these steps are finished, the status will remain as incomplete. Once CloudTrail Lake starts receiving events for the integrated partner or application, the status field will be updated to reflect the current state.

To ingest your application’s activity events into your integration, call the PutAuditEvents API to add the payload of events. Be sure that there is no sensitive or personally identifying information in the event payload before ingesting it into CloudTrail Lake.

You can make a JSON array of event objects, which includes a required user-generated ID from the event, the required payload of the event as the value of EventData, and an optional checksum to help validate the integrity of the event after ingestion into CloudTrail Lake.
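As a rough illustration of the event objects described above, the following Python sketch builds the array locally without calling AWS. The helper names `make_audit_event` and `make_request_body` are hypothetical. The key names mirror the skeleton in this post, so check the API reference for the exact casing your SDK or CLI expects. The checksum is assumed here to be a base64-encoded SHA-256 digest of the EventData string; confirm the expected algorithm in the API reference before relying on it.

```python
import base64
import hashlib
import json
import uuid


def make_audit_event(payload: dict, with_checksum: bool = True) -> dict:
    """Wrap one activity record in the Id / EventData / EventDataChecksum shape."""
    # EventData is carried as a JSON string, not as a nested object.
    event_data = json.dumps(payload, separators=(",", ":"), sort_keys=True)
    event = {
        "Id": str(uuid.uuid4()),  # user-generated ID; a UUID is one option
        "EventData": event_data,
    }
    if with_checksum:
        # Assumption: base64-encoded SHA-256 digest of the EventData string.
        digest = hashlib.sha256(event_data.encode("utf-8")).digest()
        event["EventDataChecksum"] = base64.b64encode(digest).decode("ascii")
    return event


def make_request_body(payloads: list[dict]) -> dict:
    """Build the top-level object that holds the array of audit events."""
    return {"AuditEvents": [make_audit_event(p) for p in payloads]}


if __name__ == "__main__":
    # Illustrative fields only; a real payload follows the CloudTrail Lake event schema.
    body = make_request_body([
        {"eventVersion": "0.01", "eventSource": "MyCustomLog2"},
    ])
    print(json.dumps(body, indent=2))
```

Computing the checksum from the exact string that is sent as EventData matters, because any re-serialization of the payload after hashing would change the digest. Serialized, the request body takes the shape shown next.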

{
  "AuditEvents": [
    {
      "Id": "event_ID",
      "EventData": "{event_payload}",
      "EventDataChecksum": "optional_checksum"
    },
    ...
  ]
}

The following example shows how to use the put-audit-events AWS CLI command.

$ aws cloudtrail-data put-audit-events \
    --channel-arn $ChannelArn \
    --external-id $UniqueExternalIDFromPartner \
    --audit-events '[
      {
        "Id": "87f22433-0f1f-4a85-9664-d50a3545baef",
        "EventData": "{\"eventVersion\":\"0.01\",\"eventSource\":\"MyCustomLog2\", ...}"
      },
      {
        "Id": "7e5966e7-a999-486d-b241-b33a1671aa74",
        "EventData": "{\"eventVersion\":\"0.02\",\"eventSource\":\"MyCustomLog1\", ...}",
        "EventDataChecksum": "848df986e7dd61f3eadb3ae278e61272xxxx"
      }
    ]'

On the Editor tab in CloudTrail Lake, write your own queries for the newly integrated event data store to check delivered events.

You can make your own integration query, like getting all principals across AWS and external resources that have made API calls after a particular date:

SELECT userIdentity.principalId FROM $AWS_EVENT_DATA_STORE_ID
WHERE eventTime > '2022-09-24 00:00:00'
UNION ALL
SELECT eventData.userIdentity.principalId FROM $PARTNER_EVENT_DATA_STORE_ID
WHERE eventData.eventTime > '2022-09-24 00:00:00'

To learn more, see CloudTrail Lake event schema and sample queries to help you get started.
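If you run the cross-source query above for different event data stores or cutoff dates, a small helper can fill in the placeholders. This is a minimal sketch: the function name `build_principal_query` and the ID check are assumptions made for illustration, and the store IDs you pass in are your own event data store IDs.

```python
import re
from datetime import datetime

# Assumption: event data store IDs contain only letters, digits, and hyphens.
_STORE_ID = re.compile(r"[A-Za-z0-9-]+")


def build_principal_query(aws_store_id: str, partner_store_id: str, since: datetime) -> str:
    """Return the UNION ALL query listing principal IDs seen after `since`."""
    for store_id in (aws_store_id, partner_store_id):
        # Reject anything unexpected before it is interpolated into the query text.
        if not _STORE_ID.fullmatch(store_id):
            raise ValueError(f"unexpected event data store ID: {store_id!r}")
    ts = since.strftime("%Y-%m-%d %H:%M:%S")
    return (
        f"SELECT userIdentity.principalId FROM {aws_store_id}\n"
        f"WHERE eventTime > '{ts}'\n"
        "UNION ALL\n"
        f"SELECT eventData.userIdentity.principalId FROM {partner_store_id}\n"
        f"WHERE eventData.eventTime > '{ts}'"
    )


if __name__ == "__main__":
    # Placeholder IDs; substitute the IDs of your own event data stores.
    print(build_principal_query("aws-store-1234", "partner-store-5678", datetime(2022, 9, 24)))
```

The partner side of the query reads fields under eventData because events from external sources carry their payload there, as in the original query.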

Launch Partners

You can see the list of our launch partners to support a CloudTrail Lake integration option in the Available applications tab. Here are blog posts and announcements from our partners who collaborated on this launch (some will be added in the next few days).

- Cloud Storage Security

- Clumio

- CrowdStrike

- CyberArk

- GitHub

- Kong Inc

- LaunchDarkly

- MontyCloud

- Netskope

- Nordcloud

- Okta

- One Identity

- Shoreline.io

- Snyk

- Wiz

Now Available

AWS CloudTrail Lake now supports ingesting activity events from external sources in all AWS Regions where CloudTrail Lake is available today. To learn more, see the AWS documentation and each partner’s getting started guides.

If you are interested in becoming an AWS CloudTrail Partner, you can contact your usual partner contacts.

– Channy

from AWS News Blog https://ift.tt/E0m7nZ1

via IFTTT

Monday, January 30, 2023

New – Deployment Pipelines Reference Architecture and Reference Implementations

Today, we are launching a new reference architecture and a set of reference implementations for enterprise-grade deployment pipelines. A deployment pipeline automates the building, testing, and deploying of applications or infrastructures into your AWS environments. When you deploy your workloads to the cloud, having deployment pipelines is key to gaining agility and lowering time to market.

When I talk with you at conferences or on social media, I frequently hear that our documentation and tutorials are good resources to get started with a new service or a new concept. However, when you want to scale your usage or when you have complex or enterprise-grade use cases, you often lack resources to dive deeper.

This is why, over the years, we have created hundreds of reference architectures based on real-life use cases, as well as the security reference architecture. Today, we are adding a new reference architecture to this collection.

We used the best practices and lessons learned at Amazon and with hundreds of customer projects to create this deployment pipeline reference architecture and implementations. They go well beyond the typical “Hello World” example: They document how to architect and how to implement complex deployment pipelines with multiple environments, multiple AWS accounts, multiple Regions, manual approval, automated testing, automated code analysis, etc. When you want to increase the speed at which you deliver software to your customers through DevOps and continuous delivery, this new reference architecture shows you how to combine AWS services to work together. They document the mandatory and optional components of the architecture.

Having an architecture document and diagram is great, but having an implementation is even better. Each pipeline type in the reference architecture has at least one reference implementation. One of the reference implementations uses an AWS Cloud Development Kit (AWS CDK) application to deploy the reference architecture on your accounts. It is a good starting point to study or customize the reference architecture to fit your specific requirements.

You will find this reference architecture and its implementations at https://pipelines.devops.aws.dev.

Let’s Deploy a Reference Implementation

The new deployment pipeline reference architecture demonstrates how to build a pipeline to deploy a Java containerized application and a database. It comes with two reference implementations. We are working on additional pipeline types to deploy Amazon EC2 AMIs, manage a fleet of accounts, and manage dynamic configuration for your applications.

The sample application is developed with SpringBoot. It runs on top of Corretto, the Amazon-provided distribution of the OpenJDK. The application is packaged with the CDK and is deployed on AWS Fargate. But the application is not important here; you can substitute your own application. The important parts are the infrastructure components and the pipeline to deploy an application. For this pipeline type, we provide two reference implementations. One deploys the application using Amazon CodeCatalyst, the new service that we announced at re:Invent 2022, and one uses AWS CodePipeline. The CodePipeline implementation is the one I chose to deploy for this blog post.

The pipeline starts building the applications with AWS CodeBuild. It runs the unit tests and also runs Amazon CodeGuru to review code quality and security. Finally, it runs Trivy to detect additional security concerns, such as known vulnerabilities in the application dependencies. When the build is successful, the pipeline deploys the application in three environments: beta, gamma, and production. It deploys the application in the beta environment in a single Region. The pipeline runs end-to-end tests in the beta environment. All the tests must succeed before the deployment continues to the gamma environment. The gamma environment uses two Regions to host the application. After deployment in the gamma environment, the deployment into production is subject to manual approval. Finally, the pipeline deploys the application in the production environment in six Regions, with three waves of deployments made of two Regions each.
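The environment and wave layout described above can be sketched as a small data model. This is only an illustration of the rollout order, not the reference implementation itself: the `Stage` class, the helper names, and the Region names are all placeholders chosen for the example, and deploying both gamma Regions in a single wave is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    waves: tuple[tuple[str, ...], ...]  # each wave is a group of Regions deployed together
    manual_approval: bool = False       # True when a person must approve before deployment


def make_waves(regions: list[str], size: int) -> tuple[tuple[str, ...], ...]:
    """Split Regions into consecutive waves of at most `size` Regions."""
    if size < 1:
        raise ValueError("wave size must be at least 1")
    return tuple(tuple(regions[i:i + size]) for i in range(0, len(regions), size))


# Placeholder Region names; the post does not say which Regions are used.
PROD_REGIONS = [
    "us-east-1", "us-west-2", "eu-west-1",
    "eu-central-1", "ap-southeast-2", "ap-northeast-1",
]

PIPELINE = [
    Stage("beta", make_waves(["us-west-2"], 1)),
    Stage("gamma", make_waves(["us-west-2", "us-east-1"], 2)),
    Stage("production", make_waves(PROD_REGIONS, 2), manual_approval=True),
]


def deployment_order(pipeline: list[Stage]) -> list[tuple[str, int, tuple[str, ...]]]:
    """Flatten the pipeline into ordered (stage, wave number, Regions) steps."""
    steps = []
    for stage in pipeline:
        for number, wave in enumerate(stage.waves, start=1):
            steps.append((stage.name, number, wave))
    return steps


if __name__ == "__main__":
    for stage_name, number, wave in deployment_order(PIPELINE):
        print(f"{stage_name} wave {number}: {', '.join(wave)}")
```

Deploying production in waves limits how many Regions a faulty release can reach before it is noticed, which is why the six Regions are split into three groups of two instead of being updated at once.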

I need four AWS accounts to deploy this reference implementation: one to deploy the pipeline and tooling and one for each environment (beta, gamma, and production). At a high level, there are two deployment steps: first, I bootstrap the CDK for all four accounts, and then I create the pipeline itself in the toolchain account. You must plan for 2-3 hours of your time to prepare your accounts, create the pipeline, and go through a first deployment.

Once the pipeline is created, it builds, tests, and deploys the sample application from its source in AWS CodeCommit. You can commit and push changes to the application source code and see it going through the pipeline steps again.

My colleague Irshad Buch helped me try the pipeline on my account. He wrote a detailed README with step-by-step instructions to let you do the same on your side. The reference architecture that describes this implementation in detail is available on this new web page. The application source code, the AWS CDK scripts to deploy the application, and the AWS CDK scripts to create the pipeline itself are all available on AWS’s GitHub. Feel free to contribute, report issues or suggest improvements.

Available Now

The deployment pipeline reference architecture and its reference implementations are available today, free of charge. If you decide to deploy a reference implementation, we will charge you for the resources it creates on your accounts. You can use the provided AWS CDK code and the detailed instructions to deploy this pipeline on your AWS accounts. Try them today!

from AWS News Blog https://ift.tt/1q37LfY


AWS Week in Review – January 30, 2023

This week’s review post comes to you from the road, having just wrapped up sponsorship of NDC London. While there we got to speak to many .NET developers, both new and experienced with AWS, and all eager to learn more. Thanks to everyone who stopped by our expo booth to chat or ask questions to the team!

Last Week’s Launches

My team will be back on the road to our next events soon, but first, here are just some launches that caught my attention while I was at the expo booth last week:

General availability of Porting Advisor for Graviton: AWS Graviton2 processors are custom-designed Arm64 processors that deliver increased price performance over comparable x86-64 processors. They’re suitable for a wide range of compute workloads on Amazon Elastic Compute Cloud (Amazon EC2) including application servers, microservices, high-performance computing (HPC), CPU-based ML inference, gaming, and many more. They’re also available in other AWS services such as AWS Lambda and AWS Fargate, to name just a few. The new Porting Advisor for Graviton is a freely available, open-source command line tool for analyzing compatibility of applications you want to run on Graviton-based compute environments. It provides a report that highlights missing or outdated libraries and code that you may need to update in order to port your application to run on Graviton processors.

Runtime management controls for AWS Lambda: Automated feature updates, performance improvements, and security patches to runtime environments for Lambda functions are popular with many customers. However, some customers have asked for increased visibility into when these updates occur, and control over when they’re applied. The new runtime management controls for Lambda provide optional capabilities for those customers that require more control over runtime changes. The new controls are optional; by default, all your Lambda functions will continue to receive automatic updates. But, if you wish, you can now apply a runtime management configuration with your functions that specifies how you want updates to be applied. You can find full details on the new runtime management controls in this blog post on the AWS Compute Blog.

General availability of Amazon OpenSearch Serverless: OpenSearch Serverless was one of the livestream segments in the recent AWS on Air re:Invent Recap of previews that were announced at the conference last December. OpenSearch Serverless is now generally available. As a serverless option for Amazon OpenSearch Service, it removes the need to configure, manage, or scale OpenSearch clusters, offering automatic provisioning and scaling of resources to enable fast ingestion and query responses.

Additional connectors for Amazon AppFlow: At AWS re:Invent 2022, I blogged about a release of new data connectors enabling data transfer from a variety of Software-as-a-Service (SaaS) applications to Amazon AppFlow. An additional set of 10 connectors, enabling connectivity from Asana, Google Calendar, JDBC, PayPal, and more, are also now available. Check out the full list of additional connectors launched this past week in this What’s New post.

AWS open-source news and updates: As usual, there’s a new edition of the weekly open-source newsletter highlighting new open-source projects, tools, and demos from the AWS Community. Read edition #143.

For a full list of AWS announcements, be sure to keep an eye on the What's New at AWS page.

Upcoming AWS Events

Check your calendars and sign up for these AWS events:

AWS Innovate Data and AI/ML edition: AWS Innovate is a free online event to learn the latest from AWS experts and get step-by-step guidance on using AI/ML to drive fast, efficient, and measurable results.

- AWS Innovate Data and AI/ML edition for Asia Pacific and Japan is taking place on February 22, 2023. Register here.

- Registrations for AWS Innovate EMEA (March 9, 2023) and the Americas (March 14, 2023) will open soon. Check the AWS Innovate page for updates.

You can find details on all upcoming events, in-person or virtual, here.

And finally, if you’re a .NET developer, my team will be at Swetugg, in Sweden, February 8-9, and DeveloperWeek, Oakland, California, February 15-17. If you’re in the vicinity at these events, be sure to stop by and say hello!

That’s all for this week. Check back next Monday for another Week in Review!

This post is part of our Week in Review series. Check back each week for a quick roundup of interesting news and announcements from AWS!

from AWS News Blog https://ift.tt/jgZW4Q5


Saturday, January 28, 2023

Wednesday, January 25, 2023

Monday, January 23, 2023

Now Open — AWS Asia Pacific (Melbourne) Region in Australia

Following up on Jeff’s post on the announcement of the Melbourne Region, today I’m pleased to share the general availability of the AWS Asia Pacific (Melbourne) Region with three Availability Zones and API name ap-southeast-4.

The AWS Asia Pacific (Melbourne) Region is the second infrastructure Region in Australia, in addition to the Asia Pacific (Sydney) Region, and the twelfth Region in Asia Pacific, joining existing Regions in Singapore, Tokyo, Seoul, Mumbai, Hong Kong, Osaka, Jakarta, Hyderabad, Sydney, and the Mainland China Beijing and Ningxia Regions.

Melbourne city historic building: Flinders Street Station built of yellow sandstone

AWS in Australia: Long-Standing History

In November 2012, AWS established a presence in Australia with the AWS Asia Pacific (Sydney) Region. Since then, AWS has provided continuous investments in infrastructure and technology to help drive digital transformations in Australia, to support hundreds of thousands of active customers each month.

Amazon CloudFront — Amazon CloudFront is a content delivery network (CDN) service built for high performance, security, and developer convenience that was first launched in Australia alongside the Asia Pacific (Sydney) Region in 2012. To further accelerate the delivery of static and dynamic web content to end users in Australia, AWS announced additional CloudFront locations for Sydney and Melbourne in 2014. In addition, AWS also announced a Regional Edge Cache in 2016 and an additional CloudFront point of presence (PoP) in Perth in 2018. CloudFront points of presence ensure popular content can be served quickly to your viewers. Regional Edge Caches are positioned (network-wise) between the CloudFront locations and the origin and further help to improve content performance. AWS currently has seven edge locations and one Regional Edge Cache location in Australia.

AWS Direct Connect — As with CloudFront, the first AWS Direct Connect location was made available with the Asia Pacific (Sydney) Region launch in 2012. To continue helping our customers in Australia improve application performance, secure data, and reduce networking costs, AWS announced the opening of additional Direct Connect locations in Sydney (2014), Melbourne (2016), Canberra (2017), Perth (2017), and an additional location in Sydney (2022), totaling six locations.

AWS Local Zones — To help customers run applications that require single-digit millisecond latency or local data processing, customers can use AWS Local Zones. They bring AWS infrastructure (compute, storage, database, and other select AWS services) closer to end users and business centers. AWS customers can run workloads with low latency requirements on the AWS Local Zones location in Perth while seamlessly connecting to the rest of their workloads running in AWS Regions.

Upskilling Local Developers, Students, and Future IT Leaders

Digital transformation will not happen on its own. AWS runs various programs and has trained more than 200,000 people across Australia with cloud skills since 2017. There is an additional goal to train more than 29 million people globally with free cloud skills by 2025. Here’s a brief description of related programs from AWS:

- AWS re/Start is a digital skills training program that prepares unemployed, underemployed, and transitioning individuals for careers in cloud computing and connects students to potential employers.

- AWS Academy provides higher education institutions with a free, ready-to-teach cloud computing curriculum that prepares students to pursue industry-recognized certifications and in-demand cloud jobs.

- AWS Educate provides students with access to AWS services. AWS is also collaborating with governments, educators, and the industry to help individuals, both tech and nontech workers, build and deepen their digital skills to nurture a workforce that can harness the power of cloud computing and advanced technologies.

- AWS Industry Quest is a game-based training initiative designed to help professionals and teams learn and build vital cloud skills and solutions. At re:Invent 2022, AWS announced the first iteration of the program for the financial services sector. National Australia Bank (NAB) is AWS Industry Quest: Financial Services’ first beta customer globally. Through AWS Industry Quest, NAB has trained thousands of colleagues in cloud skills since 2018, resulting in more than 4,500 industry-recognized certifications.

In addition to the above programs, AWS is also committed to supporting Victoria’s local tech community through digital upskilling, community initiatives, and partnerships. Victorian Digital Skills is a new program from the Victorian Government that helps create a new pipeline of talent to meet the digital skills needs of Victorian employers. AWS has taken steps to help solve the retraining challenge by supporting the Victorian Digital Skills program, which enables mid-career Victorians to reskill on technology and gain access to higher-paying jobs.

The Climate Pledge

Amazon is committed to investing and innovating across its businesses to help create a more sustainable future. With The Climate Pledge, Amazon is committed to reaching net-zero carbon across its business by 2040 and is on a path to powering its operations with 100 percent renewable energy by 2025.

As of May 2022, two projects in Australia are operational. Amazon Solar Farm Australia – Gunnedah and Amazon Solar Farm Australia – Suntop will aim to generate 392,000 MWh of renewable energy each year, equal to the annual electricity consumption of 63,000 Australian homes. Once Amazon Wind Farm Australia – Hawkesdale also becomes operational, it will boost the projects’ combined yearly renewable energy generation to 717,000 MWh, or enough for nearly 115,000 Australian homes.

AWS Customers in Australia

We have customers in Australia that are doing incredible things with AWS, for example:

National Australia Bank Limited (NAB)

NAB is one of Australia’s largest banks and Australia’s largest business bank. “We have been exploring the potential use cases with AWS since the announcement of the AWS Asia Pacific (Melbourne) Region,” said Steve Day, Chief Technology Officer at NAB.

Locating key banking applications and critical workloads geographically close to their compute platform and the bulk of their corporate workforce will provide lower latency benefits. Moreover, it will simplify their disaster recovery plans. The AWS Asia Pacific (Melbourne) Region will also accelerate their strategy to move 80 percent of applications to the cloud by 2025.

Littlepay

This Melbourne-based financial technology company works with more than 250 transport and mobility providers to enable contactless payments on local buses, city networks, and national public transport systems.

“Our mission is to create a universal payment experience around the world, which requires world-class global infrastructure that can grow with us,” said Amin Shayan, CEO at Littlepay. “To drive a seamless experience for our customers, we ingest and process over 1 million monthly transactions in real time using AWS, which enables us to generate insights that help us improve our services. We are excited about the launch of a second AWS Region in Australia, as it gives us access to advanced technologies, like machine learning and artificial intelligence, at a lower latency to help make commuting a simpler and more enjoyable experience.”

Royal Melbourne Institute of Technology (RMIT)

RMIT is a global university of technology, design, and enterprise with more than 91,000 students and 11,000 staff around the world.

“Today’s launch of the AWS Region in Melbourne will open up new ways for our researchers to drive computational engineering and maximize the scientific return,” said Professor Calum Drummond, Deputy Vice-Chancellor and Vice-President, Research and Innovation, and Interim DVC, STEM College, at RMIT.

“We recently launched RMIT University’s AWS Cloud Supercomputing facility (RACE) for RMIT researchers, who are now using it to power advances into battery technologies, photonics, and geospatial science. The low latency and high throughput delivered by the new AWS Region in Melbourne, combined with our 400 Gbps-capable private fiber network, will drive new ways of innovation and collaboration yet to be discovered. We fundamentally believe RACE will help truly democratize high-performance computing capabilities for researchers to run their datasets and make faster discoveries.”

Australian Bureau of Statistics (ABS)

ABS holds the Census of Population and Housing every five years. It is the most comprehensive snapshot of Australia, collecting data from around 10 million households and more than 25 million people.

“In this day and age, people expect a fast and simple online experience when using government services,” said Bindi Kindermann, program manager for 2021 Census Field Operations at ABS. “Using AWS, the ABS was able to scale and securely deliver services to people across the country, making it possible for them to quickly and easily participate in this nationwide event.”

With the success of the 2021 Census, the ABS is continuing to expand its use of AWS into broader areas of its business, making use of the security, reliability, and scalability of the cloud.

You can find more inspiring stories from our customers in Australia by visiting the Customer Success Stories page.

Things to Know

AWS User Groups in Australia — Australia is also home to 9 AWS Heroes, 43 AWS Community Builders and community members of 17 AWS User Groups in various cities in Australia. Find an AWS User Group near you to meet and collaborate with fellow developers, participate in community activities and share your AWS knowledge.

AWS Global Footprint — With this launch, AWS now spans 99 Availability Zones within 31 geographic Regions around the world. We have also announced plans for 12 more Availability Zones and 4 more AWS Regions in Canada, Israel, New Zealand, and Thailand.

Available Now — The new Asia Pacific (Melbourne) Region is ready to support your business, and you can find a detailed list of the services available in this Region on the AWS Regional Services List.

To learn more, please visit the Global Infrastructure page, and start building on ap-southeast-4!

Happy building!

— Donnie

from AWS News Blog https://ift.tt/Izn0NHr


AWS Week in Review – January 23, 2023

Welcome to my first AWS Week in Review of 2023. As usual, it has been a busy week, so let’s dive right in:

Last Week’s Launches

Here are some launches that caught my eye last week:

Amazon Connect – You can now deliver long lasting, persistent chat experiences for your customers, with the ability to resume previous conversations including context, metadata, and transcripts. Learn more.

Amazon RDS for MariaDB – You can now enforce the use of encrypted (SSL/TLS) connections to your database instances that are running Amazon RDS for MariaDB. Learn more.

Amazon CloudWatch – You can now use Metric Streams to send metrics across AWS accounts on a continuous, near real-time basis, within a single AWS Region. Learn more.

AWS Serverless Application Model – You can now run CloudFormation Linter from the SAM CLI to validate your SAM templates. The default rules check template size, Fn::GetAtt parameters, Fn::If syntax, and more. Learn more.

EC2 Auto Scaling – You can now see (and take advantage of) recommendations for activating a predictive scaling policy to optimize the capacity of your Auto Scaling groups. Recommendations can make use of up to 8 weeks of past data; learn more.

Service Limit Increases – Service limits for several AWS services were raised, and other services now have additional quotas that can be raised upon request:

- Amazon S3 File Gateway – Up to 50 file shares per gateway

- AWS Fault Injection Simulator (FIS) – Quota adjustment and higher resource quotas

- Amazon EMR Serverless – New vCPU-based quota

- Amazon Chime SDK – Up to 250 webcam video streams

- Amazon Elastic File System (Amazon EFS) – Up to 1,000 access points per file system

X In Y – Existing AWS services became available in additional regions:

- Amazon FSx for Lustre in Middle East (UAE)

- Amazon FSx for Windows File Server in Middle East (UAE)

- X2idn and X2iden Instances in US West (N. California)

- AWS Shield Advanced in Middle East (UAE)

- Amazon Elastic File System in Europe (Spain)

- AWS CodeBuild in Middle East (UAE)

- AWS Elemental MediaTailor in Africa (Cape Town), Asia Pacific (Mumbai), and US East (Ohio)

- AWS Managed Services (AMS) in Africa (Cape Town)

Other AWS News

Here are some other news items and blog posts that may be of interest to you:

AWS Open Source News and Updates – My colleague Ricardo Sueiras highlights the latest open source projects, tools, and demos from the open source community around AWS. Read edition #142 here.

AWS Fundamentals – This new book is designed to teach you about AWS in a real-world context. It covers the fundamental AWS services (compute, database, networking, and so forth), and helps you to make use of Infrastructure as Code using AWS CloudFormation, CDK, and Serverless Framework. As an add-on purchase you can also get access to a set of gorgeous, high-resolution infographics.

Upcoming AWS Events

Check your calendars and sign up for these AWS events:

AWS on Air – Every Friday at Noon PT we discuss the latest news and go in-depth on several of the most recent launches. Learn more.

#BuildOnLive – Build On AWS Live events are a series of technical streams on twitch.tv/aws that focus on technology topics related to challenges hands-on practitioners face today:

- Join the Build On Live Weekly show about the cloud, the community, the code, and everything in between, hosted by AWS Developer Advocates. The show streams every Thursday at 9:00 PT on twitch.tv/aws.

- Join the new The Big Dev Theory show, co-hosted with AWS partners, discussing various topics such as data and AI, AIOps, integration, and security. The show streams every Tuesday at 8:00 PT on twitch.tv/aws.

Check the AWS Twitch schedule for all shows.

AWS Community Days – AWS Community Day events are community-led conferences that deliver a peer-to-peer learning experience, providing developers with a venue to acquire AWS knowledge in their preferred way: from one another.

- In January, the AWS community will host in-person events in Singapore (January 28) and in Tel Aviv, Israel (January 30).

AWS Innovate Data and AI/ML edition – AWS Innovate is a free online event to learn the latest from AWS experts and get step-by-step guidance on using AI/ML to drive fast, efficient, and measurable results.

- AWS Innovate Data and AI/ML edition for Asia Pacific and Japan is taking place on February 22, 2023. Register here.

- Registrations for AWS Innovate EMEA (March 9, 2023) and the Americas (March 14, 2023) will open soon. Check the AWS Innovate page for updates.

You can browse all upcoming in-person and virtual events.

And that’s all for this week!

— Jeff;

from AWS News Blog https://ift.tt/hU58CaK

via IFTTT

Tuesday, January 17, 2023

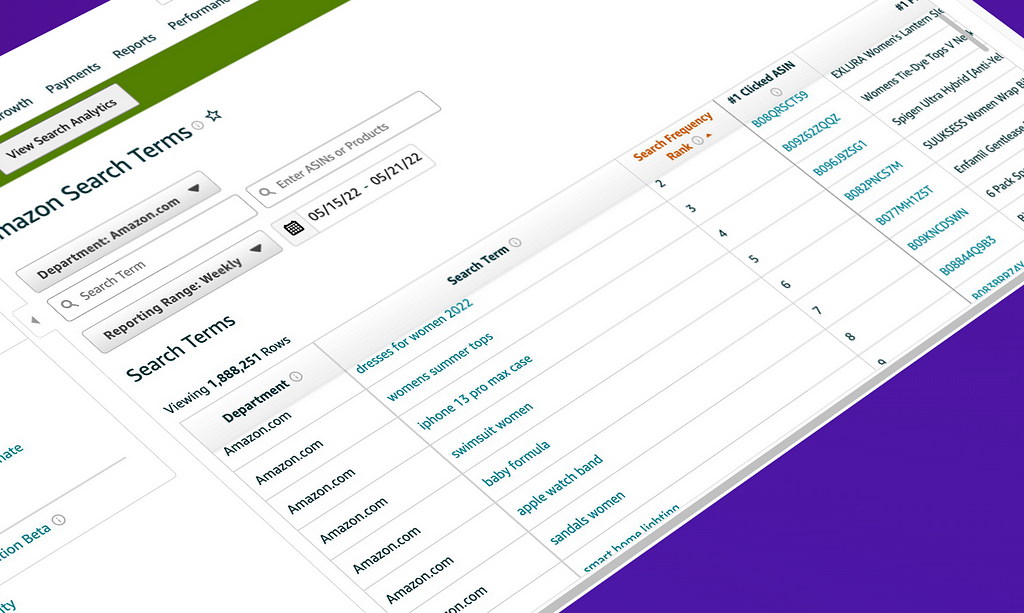

Openbridge vs. Saras Analytics Daton

Comparing Openbridge alternative Saras Analytics for Amazon data automation

This evaluation aims to compare the features and capabilities of Openbridge and Saras Analytics, also known as Daton, and determine which product best fits Amazon Seller, Vendor, Advertiser, and Agency needs.

Monday, January 16, 2023

AWS Week in Review – January 16, 2023

Today, we celebrate Martin Luther King Jr. Day in the US to honor the late civil rights leader’s life, legacy, and achievements. In this article, Amazon employees share what MLK Day means to them and how diversity makes us stronger.

Coming back to our AWS Week in Review—it’s been a busy week!

Last Week’s Launches

Here are some launches that got my attention during the previous week:

AWS Local Zones in Perth and Santiago now generally available – AWS Local Zones help you run latency-sensitive applications closer to end users. AWS now has a total of 29 Local Zones: 12 outside of the US (Bangkok, Buenos Aires, Copenhagen, Delhi, Hamburg, Helsinki, Kolkata, Muscat, Perth, Santiago, Taipei, and Warsaw) and 17 in the US. See the full list of available and announced AWS Local Zones and learn how to get started.

AWS Clean Rooms now available in preview – During AWS re:Invent this past November, we announced AWS Clean Rooms, a new analytics service that helps companies across industries easily and securely analyze and collaborate on their combined datasets—without sharing or revealing underlying data. You can now start using AWS Clean Rooms (Preview).

Amazon Kendra updates – Amazon Kendra is an intelligent search service powered by machine learning (ML) that helps you search across different content repositories with built-in connectors. With the new Amazon Kendra Intelligent Ranking for self-managed OpenSearch, you can now improve the quality of your OpenSearch search results using Amazon Kendra’s ML-powered semantic ranking technology.

Amazon Kendra also released an Amazon S3 connector with VPC support to index and search documents from Amazon S3 hosted in your VPC, a new Google Drive Connector to index and search documents from Google Drive, a Microsoft Teams Connector to enable Microsoft Teams messaging search, and a Microsoft Exchange Connector to enable email-messaging search.

Amazon Personalize updates – Amazon Personalize helps you improve customer engagement through personalized product and content recommendations. Using the new Trending-Now recipe, you can now generate recommendations for items that are rapidly becoming more popular with your users. Amazon Personalize now also supports tag-based resource authorization. Tags are labels in the form of key-value pairs that can be attached to individual Amazon Personalize resources to manage resources or allocate costs.

Amazon SageMaker Canvas now delivers up to 3x faster ML model training time – SageMaker Canvas is a visual interface that enables business analysts to generate accurate ML predictions on their own—without having to write a single line of code. The accelerated model training times help you prototype and experiment more rapidly, shortening the time to generate predictions and turn data into valuable insights.

For a full list of AWS announcements, be sure to keep an eye on the What's New at AWS page.

Other AWS News

Here are some additional news items and blog posts that you may find interesting:

AWS open-source news and updates – My colleague Ricardo writes this weekly open-source newsletter in which he highlights new open-source projects, tools, and demos from the AWS Community. Read edition #141 here.

ML model hosting best practices in Amazon SageMaker – This seven-part blog series discusses best practices for ML model hosting in SageMaker to help you identify which hosting design pattern meets your needs best. The blog series also covers advanced concepts such as multi-model endpoints (MME), multi-container endpoints (MCE), serial inference pipelines, and model ensembles. Read part one here.

I would also like to recommend this really interesting Amazon Science article about differential privacy for end-to-end speech recognition. The data used to train AI models is protected by differential privacy (DP), which adds noise during training. In this article, Amazon researchers show how ensembles of teacher models can meet DP constraints while reducing error by more than 26 percent relative to standard DP methods.

Upcoming AWS Events

Check your calendars and sign up for these AWS events:

#BuildOnLive – Build On AWS Live events are a series of technical streams on twitch.tv/aws that focus on technology topics related to challenges hands-on practitioners face today.

- Join the Build On Live Weekly show about the cloud, the community, the code, and everything in between, hosted by AWS Developer Advocates. The show streams every Thursday at 09:00 US PT on twitch.tv/aws.

- Join The Big Dev Theory, a new show co-hosted with AWS partners that discusses topics such as data and AI, AIOps, integration, and security. The show streams every Tuesday at 08:00 US PT on twitch.tv/aws.

Check the AWS Twitch schedule for all shows.

AWS Community Days – AWS Community Day events are community-led conferences that deliver a peer-to-peer learning experience, providing developers with a venue to acquire AWS knowledge in their preferred way: from one another.

- In January, the AWS community will host in-person events in Singapore (January 28) and in Tel Aviv, Israel (January 30).

AWS Innovate Data and AI/ML edition – AWS Innovate is a free online event to learn the latest from AWS experts and get step-by-step guidance on using AI/ML to drive fast, efficient, and measurable results.

- AWS Innovate Data and AI/ML edition for Asia Pacific and Japan is taking place on February 22, 2023. Register here.

- Registrations for AWS Innovate EMEA (March 9, 2023) and the Americas (March 14, 2023) will open soon. Check the AWS Innovate page for updates.

You can browse all upcoming in-person and virtual events.

That’s all for this week. Check back next Monday for another Week in Review!

— Antje

This post is part of our Week in Review series. Check back each week for a quick roundup of interesting news and announcements from AWS!

from AWS News Blog https://ift.tt/DB02Ytc

via IFTTT

Thursday, January 5, 2023

Amazon S3 Encrypts New Objects By Default

At AWS, security is job zero. Starting today, Amazon Simple Storage Service (Amazon S3) encrypts all new objects by default. Now, S3 automatically applies server-side encryption (SSE-S3) for each new object, unless you specify a different encryption option. SSE-S3 was first launched in 2011. As Jeff wrote at the time: “Amazon S3 server-side encryption handles all encryption, decryption, and key management in a totally transparent fashion. When you PUT an object, we generate a unique key, encrypt your data with the key, and then encrypt the key with a [root] key.”

This change puts another security best practice into effect automatically—with no impact on performance and no action required on your side. S3 buckets that do not use default encryption will now automatically apply SSE-S3 as the default setting. Existing buckets currently using S3 default encryption will not change.

As always, you can choose to encrypt your objects using one of the three encryption options we provide: S3 default encryption (SSE-S3, the new default), customer-provided encryption keys (SSE-C), or AWS Key Management Service keys (SSE-KMS). To have an additional layer of encryption, you might also encrypt objects on the client side, using client libraries such as the Amazon S3 encryption client.

While it was simple to enable, the opt-in nature of SSE-S3 meant that you had to be certain that it was always configured on new buckets and verify that it remained configured properly over time. For organizations that require all their objects to remain encrypted at rest with SSE-S3, this update helps meet their encryption compliance requirements without any additional tools or client configuration changes.

With today’s announcement, we have now made it “zero click” for you to apply this base level of encryption on every S3 bucket.

Verify Your Objects Are Encrypted

The change is visible today in AWS CloudTrail data event logs. You will see the changes in the S3 section of the AWS Management Console, Amazon S3 Inventory, Amazon S3 Storage Lens, and as an additional header in the AWS CLI and in the AWS SDKs over the next few weeks. We will update this blog post and documentation when the encryption status is available in these tools in all AWS Regions.

To verify the change is effective on your buckets today, you can configure CloudTrail to log data events. By default, trails do not log data events, and there is an extra cost to enable them. Data events show the resource operations performed on or within a resource, such as when a user uploads a file to an S3 bucket. You can log data events for Amazon S3 buckets, AWS Lambda functions, Amazon DynamoDB tables, or a combination of those.
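As a rough illustration of what enabling S3 data events involves, the sketch below builds an event-selector structure of the kind accepted by the CloudTrail put-event-selectors operation (for example through the AWS CLI or an SDK). The helper name s3_data_event_selectors is hypothetical, and the bucket name is only a placeholder taken from the example in this post; check the CloudTrail documentation before applying anything like this to a real trail.

```python
import json


def s3_data_event_selectors(bucket_names):
    """Build an event-selector list that logs object-level S3 data events.

    Hypothetical helper for illustration; it only builds a data structure
    and does not call AWS.
    """
    return [
        {
            "ReadWriteType": "All",            # log both read and write events
            "IncludeManagementEvents": True,   # keep management events as well
            "DataResources": [
                {
                    "Type": "AWS::S3::Object",
                    # A trailing slash after the bucket name covers every object in it.
                    "Values": [f"arn:aws:s3:::{name}/" for name in bucket_names],
                }
            ],
        }
    ]


if __name__ == "__main__":
    # "private-sst" is the bucket used in this post's example; replace with your own.
    print(json.dumps(s3_data_event_selectors(["private-sst"]), indent=2))
```

The printed JSON could be saved to a file and supplied as the event selectors for a trail of your choice. Remember that logging data events incurs additional cost.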

Once enabled, search for PutObject API calls for file uploads or InitiateMultipartUpload calls for multipart uploads. When Amazon S3 automatically encrypts an object using the default encryption settings, the log includes the following field as a name-value pair: "SSEApplied":"Default_SSE_S3". This is the field to look for in the CloudTrail log (with data event logging enabled) after an upload such as the one I made to one of my buckets using the AWS CLI command aws s3 cp backup.sh s3://private-sst.
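If you have CloudTrail log files on hand, a small script can do this search for you. The following is a minimal sketch, not an official tool: it assumes the log file is JSON with a top-level "Records" array, and it looks for the SSEApplied field anywhere inside each record rather than relying on a specific location. The function names and the trimmed sample record are hypothetical, and the event names are the ones mentioned above; adjust them if your logs use different names.

```python
import json

# Event names taken from the text above; treat them as an adjustable assumption.
UPLOAD_EVENTS = frozenset({"PutObject", "InitiateMultipartUpload"})


def find_key(node, key):
    """Return the first value stored under `key` in nested dicts/lists, else None."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def default_encrypted_uploads(log_text, event_names=UPLOAD_EVENTS):
    """Return upload records whose SSEApplied field equals Default_SSE_S3."""
    records = json.loads(log_text).get("Records", [])
    return [
        record
        for record in records
        if record.get("eventName") in event_names
        and find_key(record, "SSEApplied") == "Default_SSE_S3"
    ]


if __name__ == "__main__":
    # Hypothetical, heavily trimmed record; real CloudTrail records carry many more
    # fields, and the placement of SSEApplied here is an assumption.
    sample = json.dumps({
        "Records": [
            {
                "eventName": "PutObject",
                "requestParameters": {"bucketName": "private-sst", "key": "backup.sh"},
                "additionalEventData": {"SSEApplied": "Default_SSE_S3"},
            }
        ]
    })
    for record in default_encrypted_uploads(sample):
        print(record["eventName"], record["requestParameters"]["key"])
```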

Amazon S3 Encryption Options

As I wrote earlier, SSE-S3 is now the base level of encryption when no other encryption type is specified. SSE-S3 uses Advanced Encryption Standard (AES) encryption with 256-bit keys managed by AWS.

You can choose to encrypt your objects using SSE-C or SSE-KMS rather than with SSE-S3, either as “one click” default encryption settings on the bucket, or for individual objects in PUT requests.

SSE-C lets Amazon S3 perform the encryption and decryption of your objects while you retain control of the keys used to encrypt objects. With SSE-C, you don’t need to implement or use a client-side library to perform the encryption and decryption of objects you store in Amazon S3, but you do need to manage the keys that you send to Amazon S3 to encrypt and decrypt objects.

With SSE-KMS, AWS Key Management Service (AWS KMS) manages your encryption keys. Using AWS KMS to manage your keys provides several additional benefits. With AWS KMS, there are separate permissions for the use of the KMS key, providing an additional layer of control as well as protection against unauthorized access to your objects stored in Amazon S3. AWS KMS provides an audit trail so you can see who used your key to access which object and when, as well as view failed attempts to access data from users without permission to decrypt the data.
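To make the per-request choice more concrete, here is a minimal sketch of how the three server-side options might map onto the encryption parameters of a PutObject request. The parameter names (ServerSideEncryption, SSEKMSKeyId, SSECustomerAlgorithm, SSECustomerKey) follow the AWS SDK for Python (boto3); the helper encryption_args and the mode labels are hypothetical, and the helper only builds a dictionary without calling AWS.

```python
def encryption_args(mode, kms_key_id=None, customer_key=None):
    """Return PutObject keyword arguments for the chosen server-side encryption mode.

    Hypothetical helper for illustration; `mode` is one of
    "SSE-S3", "SSE-KMS", or "SSE-C".
    """
    if mode == "SSE-S3":
        # Explicitly requesting what is now applied by default.
        return {"ServerSideEncryption": "AES256"}
    if mode == "SSE-KMS":
        args = {"ServerSideEncryption": "aws:kms"}
        if kms_key_id is not None:
            args["SSEKMSKeyId"] = kms_key_id
        return args
    if mode == "SSE-C":
        # With SSE-C you supply and manage the key yourself: 32 bytes for AES-256.
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C requires a 32-byte customer-provided key")
        return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": customer_key}
    raise ValueError(f"unknown encryption mode: {mode}")


if __name__ == "__main__":
    print(encryption_args("SSE-S3"))
    print(encryption_args("SSE-KMS", kms_key_id="your-kms-key-id"))  # placeholder ID
```

With boto3 installed and credentials configured, the result could be unpacked into a call such as put_object(Bucket=..., Key=..., Body=..., **encryption_args("SSE-KMS", kms_key_id=...)). Omitting these arguments altogether leaves the bucket's default encryption in effect.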

When using an encryption client library, such as the Amazon S3 encryption client, you retain control of the keys and complete the encryption and decryption of objects client-side using an encryption library of your choice. You encrypt the objects before they are sent to Amazon S3 for storage. The Java, .NET, Ruby, PHP, Go, and C++ AWS SDKs support client-side encryption.

You can follow the instructions in this blog post if you want to retroactively encrypt existing objects in your buckets.

Available Now

This change is effective now in all AWS Regions, including the AWS GovCloud (US) and AWS China Regions. There is no additional cost for default object-level encryption.

from AWS News Blog https://ift.tt/x5Z6fWI

via IFTTT