Friday, December 29, 2023

Thursday, December 21, 2023

Your MySQL 5.7 and PostgreSQL 11 databases will be automatically enrolled into Amazon RDS Extended Support

Today, we are announcing that your MySQL 5.7 and PostgreSQL 11 database instances running on Amazon Aurora and Amazon Relational Database Service (Amazon RDS) will be automatically enrolled into Amazon RDS Extended Support starting on February 29, 2024.

This will help avoid unplanned downtime and compatibility issues that can arise with automatically upgrading to a new major version. This provides you with more control over when you want to upgrade the major version of your database.

This automatic enrollment may mean that you will experience higher charges when RDS Extended Support begins. You can avoid these charges by upgrading your database to a newer DB version before the start of RDS Extended Support.

What is Amazon RDS Extended Support?

In September 2023, we announced Amazon RDS Extended Support, which allows you to continue running your database on a major engine version past its RDS end of standard support date on Amazon Aurora or Amazon RDS at an additional cost.

Until community end of life (EoL), the MySQL and PostgreSQL open source communities manage common vulnerabilities and exposures (CVE) identification, patch generation, and bug fixes for the respective engines. The communities release a new minor version every quarter containing these security patches and bug fixes until the database major version reaches community end of life. After the community end of life date, CVE patches or bug fixes are no longer available and the community considers those engines unsupported. For example, MySQL 5.7 and PostgreSQL 11 are no longer supported by the communities as of October and November 2023 respectively. We are grateful to the communities for their continued support of these major versions and a transparent process and timeline for transitioning to the newest major version.

With RDS Extended Support, Amazon Aurora and RDS takes on engineering the critical CVE patches and bug fixes for up to three years beyond a major version’s community EoL. For those 3 years, Amazon Aurora and RDS will work to identify CVEs and bugs in the engine, generate patches and release them to you as quickly as possible. Under RDS Extended Support, we will continue to offer support, such that the open source community’s end of support for an engine’s major version does not leave your applications exposed to critical security vulnerabilities or unresolved bugs.

You might wonder why we are charging for RDS Extended Support rather than providing it as part of the RDS service. It’s because the engineering work for maintaining security and functionality of community EoL engines requires AWS to invest developer resources for critical CVE patches and bug fixes. This is why RDS Extended Support is only charging customers who need the additional flexibility to stay on a version past community EoL.

RDS Extended Support may be useful to help you meet your business requirements for your applications if you have particular dependencies on a specific MySQL or PostgreSQL major version, such as compatibility with certain plugins or custom features. If you are currently running on-premises database servers or self-managed Amazon Elastic Compute Cloud (Amazon EC2) instances, you can migrate to Amazon Aurora MySQL-Compatible Edition, Amazon Aurora PostgreSQL-Compatible Edition, Amazon RDS for MySQL, Amazon RDS for PostgreSQL beyond the community EoL date, and continue to use these versions these versions with RDS Extended Support while benefiting from a managed service. If you need to migrate many databases, you can also utilize RDS Extended Support to split your migration into phases, ensuring a smooth transition without overwhelming IT resources.

In 2024, RDS Extended Support will be available for RDS for MySQL major versions 5.7 and higher, RDS for PostgreSQL major versions 11 and higher, Aurora MySQL-compatible version 2 and higher, and Aurora PostgreSQL-compatible version 11 and higher. For a list of all future supported versions, see Supported MySQL major versions on Amazon RDS and Amazon Aurora major versions in the AWS documentation.

| Community major version | RDS/Aurora version | Community end of life date | End of RDS standard support date | Start of RDS Extended Support pricing | End of RDS Extended Support |

| MySQL 5.7 | RDS for MySQL 5.7 | October 2023 | February 29, 2024 | March 1, 2024 | February 28, 2027 |

| Aurora MySQL 2 | October 31, 2024 | December 1, 2024 | |||

| PostgreSQL 11 | RDS for PostgreSQL 11 | November 2023 | March 31, 2024 | April 1, 2024 | March 31, 2027 |

| Aurora PostgreSQL 11 | February 29, 2024 |

RDS Extended Support is priced per vCPU per hour. Learn more about pricing details and timelines for RDS Extended Support at Amazon Aurora pricing, RDS for MySQL pricing, and RDS for PostgreSQL pricing. For more information, see the blog posts about Amazon RDS Extended Support for MySQL and PostgreSQL databases in the AWS Database Blog.

Why are we automatically enrolling all databases to Amazon RDS Extended Support?

We had originally informed you that RDS Extended Support would provide the opt-in APIs and console features in December 2023. In that announcement, we said that if you decided not to opt your database in to RDS Extended Support, it would automatically upgrade to a newer engine version starting on March 1, 2024. For example, you would be upgraded from Aurora MySQL 2 or RDS for MySQL 5.7 to Aurora MySQL 3 or RDS for MySQL 8.0 and from Aurora PostgreSQL 11 or RDS for PostgreSQL 11 to Aurora PostgreSQL 15 and RDS for PostgreSQL 15, respectively.

However, we heard lots of feedback from customers that these automatic upgrades may cause their applications to experience breaking changes and other unpredictable behavior between major versions of community DB engines. For example, an unplanned major version upgrade could introduce compatibility issues or downtime if applications are not ready for MySQL 8.0 or PostgreSQL 15.

Automatic enrollment in RDS Extended Support gives you additional time and more control to organize, plan, and test your database upgrades on your own timeline, providing you flexibility on when to transition to new major versions while continuing to receive critical security and bug fixes from AWS.

If you’re worried about increased costs due to automatic enrollment in RDS Extended Support, you can avoid RDS Extended Support and associated charges by upgrading before the end of RDS standard support.

How to upgrade your database to avoid RDS Extended Support charges

Although RDS Extended Support helps you schedule your upgrade on your own timeline, sticking with older versions indefinitely means missing out on the best price-performance for your database workload and incurring additional costs from RDS Extended Support.

MySQL 8.0 on Aurora MySQL, also known as Aurora MySQL 3, unlocks support for popular Aurora features, such as Global Database, Amazon RDS Proxy, Performance Insights, Parallel Query, and Serverless v2 deployments. Upgrading to RDS for MySQL 8.0 provides features including up to three times higher performance versus MySQL 5.7, such as Multi-AZ cluster deployments, Optimized Reads, Optimized Writes, and support for AWS Graviton2 and Graviton3-based instances.

PostgreSQL 15 on Aurora PostgreSQL supports the Aurora I/O Optimized configuration, Aurora Serverless v2, Babelfish for Aurora PostgreSQL, pgvector extension, Trusted Language Extensions for PostgreSQL (TLE), and AWS Graviton3-based instances as well as community enhancements. Upgrading to RDS for PostgreSQL 15 provides features such as Multi-AZ DB cluster deployments, RDS Optimized Reads, HypoPG extension, pgvector extension, TLEs for PostgreSQL, and AWS Graviton3-based instances.

Major version upgrades may make database changes that are not backward-compatible with existing applications. You should manually modify your database instance to upgrade to the major version. It is strongly recommended that you thoroughly test any major version upgrade on non-production instances before applying it to production to ensure compatibility with your applications. For more information about an in-place upgrade from MySQL 5.7 to 8.0, see the incompatibilities between the two versions, Aurora MySQL in-place major version upgrade, and RDS for MySQL upgrades in the AWS documentation. For the in-place upgrade from PostgreSQL 11 to 15, you can use the pg_upgrade method.

To minimize downtime during upgrades, we recommend using Fully Managed Blue/Green Deployments in Amazon Aurora and Amazon RDS. With just a few steps, you can use Amazon RDS Blue/Green Deployments to create a separate, synchronized, fully managed staging environment that mirrors the production environment. This involves launching a parallel green environment with upper version replicas of your production databases lower version. After validating the green environment, you can shift traffic over to it. Then, the blue environment can be decommissioned. To learn more, see Blue/Green Deployments for Aurora MySQL and Aurora PostgreSQL or Blue/Green Deployments for RDS for MySQL and RDS for PostgreSQL in the AWS documentation. In most cases, Blue/Green Deployments are the best option to reduce downtime, except for limited cases in Amazon Aurora or Amazon RDS.

For more information on performing a major version upgrade in each DB engine, see the following guides in the AWS documentation.

- Upgrading the MySQL DB engine for Amazon RDS

- Upgrading the PostgreSQL DB engine for Amazon RDS

- Upgrading the Amazon Aurora MySQL DB cluster

- Upgrading Amazon Aurora PostgreSQL DB clusters

Now available

Amazon RDS Extended Support is now available for all customers running Amazon Aurora and Amazon RDS instances using MySQL 5.7, PostgreSQL 11, and higher major versions in AWS Regions, including the AWS GovCloud (US) Regions beyond the end of the standard support date in 2024. You don’t need to opt in to RDS Extended Support, and you get the flexibility to upgrade your databases and continued support for up to 3 years.

Learn more about RDS Extended Support in the Amazon Aurora User Guide and the Amazon RDS User Guide. For pricing details and timelines for RDS Extended Support, see Amazon Aurora pricing, RDS for MySQL pricing, and RDS for PostgreSQL pricing.

Please send feedback to AWS re:Post for Amazon RDS and Amazon Aurora or through your usual AWS Support contacts.

— Channy

from AWS News Blog https://ift.tt/QsROhAa

via IFTTT

Wednesday, December 20, 2023

DNS over HTTPS is now available in Amazon Route 53 Resolver

Starting today, Amazon Route 53 Resolver supports using the DNS over HTTPS (DoH) protocol for both inbound and outbound Resolver endpoints. As the name suggests, DoH supports HTTP or HTTP/2 over TLS to encrypt the data exchanged for Domain Name System (DNS) resolutions.

Using TLS encryption, DoH increases privacy and security by preventing eavesdropping and manipulation of DNS data as it is exchanged between a DoH client and the DoH-based DNS resolver.

This helps you implement a zero-trust architecture where no actor, system, network, or service operating outside or within your security perimeter is trusted and all network traffic is encrypted. Using DoH also helps follow recommendations such as those described in this memorandum of the US Office of Management and Budget (OMB).

DNS over HTTPS support in Amazon Route 53 Resolver

You can use Amazon Route 53 Resolver to resolve DNS queries in hybrid cloud environments. For example, it allows AWS services access for DNS requests from anywhere within your hybrid network. To do so, you can set up inbound and outbound Resolver endpoints:

- Inbound Resolver endpoints allow DNS queries to your VPC from your on-premises network or another VPC.

- Outbound Resolver endpoints allow DNS queries from your VPC to your on-premises network or another VPC.

After you configure the Resolver endpoints, you can set up rules that specify the name of the domains for which you want to forward DNS queries from your VPC to an on-premises DNS resolver (outbound) and from on-premises to your VPC (inbound).

Now, when you create or update an inbound or outbound Resolver endpoint, you can specify which protocols to use:

- DNS over port 53 (Do53), which is using either UDP or TCP to send the packets.

- DNS over HTTPS (DoH), which is using TLS to encrypt the data.

- Both, depending on which one is used by the DNS client.

- For FIPS compliance, there is a specific implementation (DoH-FIPS) for inbound endpoints.

Let’s see how this works in practice.

Using DNS over HTTPS with Amazon Route 53 Resolver

In the Route 53 console, I choose Inbound endpoints from the Resolver section of the navigation pane. There, I choose Create inbound endpoint.

I enter a name for the endpoint, select the VPC, the security group, and the endpoint type (IPv4, IPv6, or dual-stack). To allow using both encrypted and unencrypted DNS resolutions, I select Do53, DoH, and DoH-FIPS in the Protocols for this endpoint option.

After that, I configure the IP addresses for DNS queries. I select two Availability Zones and, for each, a subnet. For this setup, I use the option to have the IP addresses automatically selected from those available in the subnet.

After I complete the creation of the inbound endpoint, I configure the DNS server in my network to forward requests for the amazonaws.com domain (used by AWS service endpoints) to the inbound endpoint IP addresses.

Similarly, I create an outbound Resolver endpoint and and select both Do53 and DoH as protocols. Then, I create forwarding rules that tell for which domains the outbound Resolver endpoint should forward requests to the DNS servers in my network.

Now, when the DNS clients in my hybrid environment use DNS over HTTPS in their requests, DNS resolutions are encrypted. Optionally, I can enforce encryption and select only DoH in the configuration of inbound and outbound endpoints.

Things to know

DNS over HTTPS support for Amazon Route 53 Resolver is available today in all AWS Regions where Route 53 Resolver is offered, including GovCloud Regions and Regions based in China.

DNS over port 53 continues to be the default for inbound or outbound Resolver endpoints. In this way, you don’t need to update your existing automation tooling unless you want to adopt DNS over HTTPS.

There is no additional cost for using DNS over HTTPS with Resolver endpoints. For more information, see Route 53 pricing.

— Danilo

from AWS News Blog https://ift.tt/6UyHLb0

via IFTTT

The AWS Canada West (Calgary) Region is now available

Today, we are opening a new Region in Canada. AWS Canada West (Calgary), also known as ca-west-1, is the thirty-third AWS Region. It consists of three Availability Zones, for a new total of 105 Availability Zones globally.

This second Canadian Region allows you to architect multi-Region infrastructures that meet five nines of availability while keeping your data in the country.

A global footprint

Our approach to building infrastructure is fundamentally different from other providers. At the core of our global infrastructure is a Region. An AWS Region is a physical location in the world where we have multiple Availability Zones. Availability Zones consist of one or more discrete data centers, each with redundant power, networking, and connectivity, housed in separate facilities. Unlike with other cloud providers, who often define a region as a single data center, having multiple Availability Zones allows you to operate production applications and databases that are more highly available, fault tolerant, and scalable than would be possible from a single data center.

AWS has more than 17 years of experience building its global infrastructure. And there’s no compression algorithm for experience, especially when it comes to scale, security, and performance.

Canadian customers of every size, including global brands like BlackBerry, CI Financial, Keyera, KOHO, Maple Leaf Sports & Entertainment (MLSE), Nutrien, Sun Life, TELUS, and startups like Good Chemistry and Cohere, and public sector organizations like the University of Calgary and Natural Resources Canada (NRCan), are already running workloads on AWS. They choose AWS for its security, performance, flexibility, and global presence.

AWS Global Infrastructure, including AWS Local Zones and AWS Outposts, gives our customers the flexibility to deploy workloads close to their customers to minimize network latency. For example, one customer that has benefited from AWS flexibility is Canadian decarbonization technology scale-up, BrainBox AI. BrainBox AI uses cloud-based artificial intelligence (AI) and machine learning (ML) on AWS to help building owners around the world reduce HVAC emissions by up to 40 percent and energy consumption by up to 25 percent. The AWS Global Infrastructure allows their solution to manage with low latency hundreds of buildings in over 20 countries, 24-7.

Services available

You can deploy your workloads on any of the C5, M5, M5d, R5, C6g, C6gn, C6i, C6id, M6g, M6gd, M6i, M6id, R6d, R6i, R6id, I4i, I3en, T3, and T4g instance families. The new AWS Canada West (Calgary) has 65 AWS services available at launch. Here is the list, sorted by alphabetical order: Amazon API Gateway, AWS AppConfig, AWS Application Auto Scaling, Amazon Aurora, Aurora PostgreSQL, AWS Batch, AWS Certificate Manager, AWS CloudFormation, Amazon CloudFront, AWS Cloud Map, AWS CloudTrail, Amazon CloudWatch, Amazon CloudWatch Events, Amazon CloudWatch Logs, AWS CodeDeploy, AWS Config, AWS Database Migration Service (AWS DMS), AWS DataSync, AWS Direct Connect, Amazon DynamoDB, Amazon ElastiCache, Amazon Elastic Block Store (Amazon EBS), Amazon Elastic Compute Cloud (Amazon EC2), Amazon EC2 Auto Scaling, Amazon Elastic Container Registry (Amazon ECR), Amazon Elastic Container Service (Amazon ECS), Amazon Elastic Kubernetes Service (Amazon EKS), Elastic Load Balancing, Elastic Load Balancing – Gateway (GWLB), Elastic Load Balancing – Network (NLB), Amazon EMR, Amazon EventBridge, AWS Fargate, AWS Health Dashboard, AWS Identity and Access Management (IAM), Amazon Kinesis Data Firehose, Amazon Kinesis Data Streams, AWS Key Management Service (AWS KMS), AWS Lambda, AWS Management Console, AWS Marketplace, Amazon OpenSearch Service, AWS Organizations, Amazon Redshift, Amazon Relational Database Service (Amazon RDS), AWS Resource Access Manager, Resource Groups, Amazon Route 53, AWS Secrets Manager, AWS Security Hub, AWS Security Token Service, Service Quotas, AWS Shield Standard, Amazon Simple Notification Service (Amazon SNS), Amazon Simple Queue Service (Amazon SQS), Amazon Simple Storage Service (Amazon S3), Amazon Simple Workflow Service (Amazon SWF), AWS Site-to-Site VPN, AWS Step Functions, AWS Support API, AWS Systems Manager, AWS Trusted Advisor, Amazon Virtual Private Cloud (Amazon VPC), VM Import/Export, and AWS X-Ray.

AWS in Canada

We have been supporting our customers and partners with infrastructure in Canada since December 2016, when the first Canadian AWS Region, AWS Canada (Central), was launched. In the same year, we launched Amazon CloudFront locations in Toronto and Montreal to better serve your customers in the region. To date, there are ten CloudFront points of presence (PoPs) in Canada: five in Toronto, four in Montreal, and one in Vancouver. We also have engineering teams located in multiple cities in the country.

From 2016–2021, AWS has invested over 2.57 billion CAD (1.9 billion USD) in Canada and plans to invest up to 24.8 billion CAD (18.3 billion USD) by 2037 in the two Regions. Using the input-output methodology and statistical tables provided by Statistics Canada, we estimate that the planned investment will add 43.02 billion CAD (31 billion USD) to the gross domestic product (GDP) of Canada and support more than 9,300 full-time equivalent (FTE) jobs in the Canadian economy.

In addition to providing our customers with world-class infrastructure benefits, Amazon is committed to reaching net zero carbon across its business by 2040 and is on a path to powering its operations with 100 percent renewable energy by 2025. In 2022, 90 percent of the electricity consumed by Amazon was attributable to renewable energy sources. Additionally, AWS has a goal to be water positive by 2030, returning more water to communities than it uses in its direct operations. Amazon has a total of four renewable energy projects in Canada: three south of Calgary and one close to Edmonton. According to BloombergNEF, Amazon is the largest corporate purchaser of renewable energy in the country (and the world). These projects generate more than 2.3 million megawatt hours (MWH) of clean energy–enough to power 1.69 million Canadian homes.

Education is one of our top priorities as well. Since 2017, we have trained more than 200,000 Canadians on cloud computing skills through free and paid AWS Training and Certification programs. Learners of various skill levels, roles, and backgrounds can build knowledge and practical skills with more than 600 free online courses in up to 14 languages on AWS Skills Builder. Amazon is committed to providing 29 million people around the world with free cloud computing skills training by 2025.

Security

Customers around the world trust AWS to keep their data safe, and keeping their workloads secure and confidential is foundational to how we operate. Since the inception of AWS, we have relentlessly innovated on security, privacy tools, and practices to meet, and even exceed, our customers’ expectations.

For example, you decide where to store your data and who can access it. Services such as AWS CloudTrail allow you to verify how and when data are accessed. Our virtualization technology, AWS Nitro System, has been designed to restrict any operator access to customer data. This means no person, or even service, from AWS can access data when it is being used in an EC2 instance. NCC Group, a leading cybersecurity consulting firm based in the United Kingdom, audited the Nitro architecture and affirmed our claims.

Our core infrastructure is built to satisfy the security requirements of the military, global banks, and other high-sensitivity organizations.

In Canada, Neo Financial is a financial tech startup that uses the elasticity of the AWS Cloud to scale its business. They chose AWS in 2019 because we helped them to meet their regulatory requirements. They use EC2 for their core infrastructure, S3 for highly durable storage, Amazon GuardDuty to improve their security posture, and CloudFront to improve performance for their customers.

Performance

The AWS Global Infrastructure is built for performance, offering the lowest latency, lowest packet loss, and highest overall network quality. This is achieved with a fully redundant 400 GbE fiber network backbone, often providing many terabits of capacity between Regions.

To help provide Canadian customers with even lower latency, we have announced two AWS Local Zones in Toronto and Vancouver.

Performance is specially important when you are streaming your favorite TV show. Calgary-based Kidoodle.TV offers a streaming service for children. They have more than 100 million app downloads worldwide and more than 1 billion ad seconds for sale every 2 days. Using AWS, Kidoodle.TV was able to build the same service architecture that multibillion-dollar companies can deploy, which allowed them to seamlessly scale up from 400,000 monthly active users to 12 million in a year.

Additional things to know

We preannounced 12 additional Availability Zones in four future Regions in Malaysia, New Zealand, Thailand, and the AWS European Sovereign Cloud. We will be happy to share more information on these Regions so, stay tuned.

I can’t wait to discover how you will innovate and what amazing services you will deploy on this new AWS Region. Go build and deploy your infrastructure on ca-west-1 today.

Aujourd’hui, nous inaugurons une nouvelle Région Amazon Web Services (AWS) au Canada. La Région AWS Canada Ouest (Calgary), également connue sous le nom ca‑west‑1, est la 33e Région AWS. Elle compte trois Zones de disponibilité, emmenant ainsi le total des Zones de disponibilité à travers le monde à 105.

Cette deuxième Région au Canada vous permet d’élaborer des infrastructures multi-Régions qui demeurent disponibles 99,999 % du temps, tout en conservant vos données à l’intérieur des frontières canadiennes.

Une empreinte mondiale

Notre approche en matière de développement de notre infrastructure est fondamentalement différente de celle adoptée par d’autres fournisseurs. Au cœur de notre infrastructure mondiale, vous trouvez des Régions. Une Région AWS est un lieu physique dans le monde, dans lequel nous avons plusieurs Zones de disponibilité. Les Zones de disponibilité sont formées d’un ou plusieurs centres de données distincts, chacun doté de systèmes d’alimentation, de réseau et de connectivité redondants, et hébergés dans des installations séparées. Contrairement aux autres fournisseurs infonuagiques, qui définissent souvent une région comme étant un centre de données unique, le fait de pouvoir compter sur plusieurs Zones de disponibilité vous permet d’exploiter des applications et des bases de données de production ayant une plus grande disponibilité, une meilleure tolérance aux pannes et une plus importante évolutivité, allant ainsi au-delà des possibilités offertes par un centre de données unique.

AWS compte plus de 17 années d’expérience dans la mise en œuvre de son infrastructure mondiale. Il n’existe pas d’algorithme de compression pour remplacer une telle expérience, surtout lorsqu’il est question d’évolutivité, de sécurité et de performances.

Des clients canadiens de toute taille, dont des marques mondiales telles que BlackBerry, CI Financial, Keyera, KOHO, Maple Leaf Sports & Entertainment (MLSE), Nutrien, Sun Life et TELUS, ainsi que de jeunes pousses comme Good Chemistry and Cohere, en plus d’organismes du secteur public telles que l’Université de Calgary et Ressources naturelles Canada (RNCan), exécutent déjà des charges de travail sur AWS. Ces entreprises et organismes ont choisi AWS pour la sécurité, les performances, la flexibilité et la présence mondiale que nous offrons.

L’infrastructure mondiale AWS, dont font partie les Zones locales AWS et les AWS Outposts, offre à nos clients la flexibilité de déployer leurs charges de travail à proximité de leur clientèle, minimisant ainsi la latence du réseau. Par exemple, un de nos clients qui bénéfice de la flexibilité d’AWS est BrainBox AI, une jeune entreprise en croissance qui élabore des technologies de décarbonation. BrainBox AI utilise l’intelligence artificielle (IA) et l’apprentissage automatique (AA) basés dans le Nuage AWS pour aider des propriétaires d’édifice, partout au monde, à réduire les émissions liées aux systèmes de chauffage, de ventilation et de climatisation jusqu’à 40 %, et la consommation énergétique jusqu’à 25 %. L’infrastructure mondiale AWS permet à leur solution de gérer, avec une latence faible, des centaines d’immeubles dans plus de 20 pays, et ce 24 heures sur 24, sept jours sur sept.

Services disponibles

Vous pouvez déployer vos charges de travail sur n’importe laquelle des familles d’instance C5, M5, M5d, R5, C6g, C6gn, C6i, C6id, M6g, M6gd, M6i, M6id, R6d, R6i, R6id, I4i, I3en, T3 et T4g. La nouvelle Région Canada Ouest (Calgary) compte 65 services AWS, tous disponibles dès le lancement. En voici la liste, en ordre alphabétique : Amazon API Gateway, AWS AppConfig, AWS Application Auto Scaling, Amazon Aurora, Aurora PostgreSQL, AWS Batch, AWS Certificate Manager, AWS CloudFormation, Amazon CloudFront, AWS Cloud Map, AWS CloudTrail, Amazon CloudWatch, Amazon CloudWatch Events, Amazon CloudWatch Logs, AWS CodeDeploy, AWS Config, AWS Database Migration Service (AWS DMS), AWS DataSync, AWS Direct Connect, Amazon DynamoDB, Amazon Elastic Block Store (Amazon EBS), Amazon Elastic Compute Cloud (Amazon EC2), Amazon EC2 Auto Scaling, Amazon Elastic Container Registry (Amazon ECR), Amazon Elastic Container Service (Amazon ECS), Amazon Elastic Kubernetes Service (Amazon EKS ), , Elastic Load Balancing, , Elastic Load Balancing – Gateway (GWLB), Amazon EMR, Amazon EventBridge, AWS Fargate, AWS Health Dashboard, AWS Identity and Access Management (IAM), Amazon Kinesis Data Streams, AWS Key Management Service (AWS KMS), AWS Lambda, AWS Management Console, AWS Marketplace, Amazon OpenSearch Service, AWS Organizations, Amazon Redshift, AWS Resource Access Manager, Resource Groups, Amazon Route 53, AWS Secrets Manager, AWS Security Hub, AWS Security Token Service, Service Quotas, AWS Shield Standard, Amazon Simple Notification Service (Amazon SNS), Amazon Simple Queue Service (Amazon SQS), Amazon Simple Storage Service (Amazon S3), Amazon Simple Workflow Service (Amazon SWF), AWS Site-to-Site VPN, AWS Step Functions, AWS Support API, AWS Systems Manager, AWS Trusted Advisor, VM Import/Export et AWS X-Ray.

AWS au Canada

Nous soutenons nos clients et partenaires grâce à notre infrastructure canadienne depuis décembre 2016, lorsque la première Région AWS au Canada, soit la Région AWS Canada (Centre), a été inaugurée. Au cours de cette même année, nous avons lancé des emplacements Amazon CloudFront à Toronto et Montréal afin de mieux servir vos clients dans ces régions. Actuellement, nous comptons 10 points de présence (PdP) au Canada : cinq à Toronto, quatre à Montréal et un à Vancouver. Nous avons également des équipes d’ingénieurs basées dans plusieurs villes à travers le pays.

Entre 2016 et 2021, AWS a investi plus de 2,57 milliards $ CAD (1,9 milliards $ USD) au Canada et prévoit investir jusqu’à 24,8 milliards $ CAD (18,3 milliards $ USD) dans nos deux Régions d’ici 2037. En se basant sur la méthodologie entrée-sortie et les tableaux statistiques fournies par Statistique Canada, nous estimons que les investissements prévus ajouteront 43,02 milliards $ CAD (31 milliards USD) au produit intérieur brut (PIB) du Canada et soutiendront plus de 9 300 emplois équivalents temps plein (ETP) au sein de l’économie canadienne.

En plus d’offrir les avantages d’une infrastructure de classe mondiale à nos clients, Amazon s’est engagé à atteindre une empreinte carbone nette zéro pour l’ensemble de ses activités d’ici 2040, et est en voie d’alimenter l’ensemble de ses opérations avec des énergies 100 % renouvelables d’ici 2025. En 2022, 90 % de l’électricité consommée par Amazon provenait de sources d’énergie renouvelables. En outre, AWS s’est donné comme objectif d’avoir un bilan positif en matière d’eau d’ici 2030, restituant ainsi plus d’eau aux communautés que la quantité utilisée pour ses activités directes. Amazon compte quatre projets d’énergie renouvelable au Canada, soit trois situés au sud de Calgary et un autre près d’Edmonton. Selon BloombergNEF, Amazon est la plus grande entreprise acheteuse d’énergie renouvelable au pays (et au monde). Ces projets génèrent plus de 2,3 millions de mégawattheures (MWh) d’énergie propre, soit suffisamment pour alimenter 1,69 million de foyers canadiens.

La formation est également l’une de nos principales priorités. Depuis 2017, nous avons formé plus de 200 000 Canadiens et Canadiennes en compétences infonuagiques par le biais de programmes de formation et certification AWS gratuits et payants. Des apprenants ayant différents niveaux de compétences, de responsabilités et d’expérience peuvent acquérir des connaissances et des compétences pratiques grâce à AWS Skills Builder, qui offre plus de 600 cours en ligne gratuits en jusqu’à 14 langues. Amazon s’est engagé à offrir des formations gratuites en compétences infonuagiques à 29 millions de personnes à travers le monde d’ici 2025.

Sécurité

Des clients du monde entier font confiance à AWS pour assurer la sécurité de leurs données, alors que la sécurisation et la confidentialité de leurs charges de travail sont des éléments fondamentaux de notre mode de fonctionnement. Depuis les tous débuts d’AWS, nous innovons sans relâche en matière de sécurité, d’outils de protection de la vie privée et de pratiques afin de répondre aux attentes de nos clients, et même dépasser ces attentes.

Par exemple, les décisions concernant l’emplacement de stockage de vos données, et qui peut y accéder, vous appartiennent. Des services tels qu’AWS CloudTrail vous permettent de vérifier comment et quand les données sont consultées. Notre technologie de virtualisation, AWS Nitro System, a été conçue pour restreindre l’accès de tout opérateur aux données de la clientèle. Cela signifie qu’aucun membre du personnel d’AWS, ou même un service AWS, peut accéder aux données lorsqu’elles sont utilisées au sein d’une instance Amazon Elastic Compute Cloud (Amazon EC2). En effet, NCC Group, une des principales firmes de conseil en cybersécurité au Royaume‑Uni, a procédé à une vérification de notre architecture Nitro et a confirmé nos affirmations.

Notre infrastructure de base est conçue pour répondre aux exigences de sécurité des armées, des banques mondiales, ainsi que d’autres organisations traitant des informations hautement sensibles.

Basée au Canada, Neo est une jeune pousse spécialisée en technologie financière qui profite de l’élasticité du Nuage AWS pour développer ses activités. En 2019, l’entreprise a choisi AWS car nous l’avions aidée à répondre aux exigences réglementaires du secteur. Elle utilise Amazon Elastic Compute Cloud (Amazon EC2) pour son infrastructure de base, Amazon Simple Storage Service (Amazon S3) pour un stockage très durable, Amazon GuardDuty pour améliorer sa posture de sécurité, ainsi qu’Amazon CloudFront afin d’optimiser les performances de ses systèmes pour sa clientèle.

Performances

L’infrastructure mondiale AWS est conçue pour offrir les meilleures performances et la plus faible latence atteignable, minimiser la perte de paquets et fournir la meilleure qualité générale pour l’ensemble du réseau. Cela est rendu possible grâce à un réseau dorsal de fibre optique de 400 GbE entièrement redondant, permettant souvent plusieurs térabits de capacité entre les Régions.

Afin d’offrir une latence encore plus faible à nos clients canadiens, nous avons annoncé la mise en place de deux Zone locales AWS à Toronto et Vancouver.

Les performances sont davantage importantes lorsque vous visionnez la diffusion en continu de votre émission préférée. L’entreprise Kidoodle.TV, basée à Calgary, offre un service de diffusion en continu destiné aux enfants. Elle compte plus de 100 millions de téléchargements de son application à travers le monde et plus d’un milliard de secondes publicitaires à vendre par période de 48 heures. En utilisant AWS, Kidoodle.TV a pu mettre en place le même type d’architecture de service que les entreprises multimilliardaires sont en mesure de déployer. Cela a permis à l’entreprise de passer, en une année, de 400 000 à 1,2 million d’utilisateurs actifs mensuels.

Informations complémentaires

Nous avons annoncé 12 futures Zones de disponibilité dans quatre Régions additionnelles en Malaisie, en Nouvelle‑Zélande, en Thaïlande et la Région souveraine en Europe; nous aurons le plaisir de partager des informations supplémentaires le moment venu.

Je suis impatient de découvrir vos innovations ainsi que les extraordinaires services que vous allez mettre en œuvre au sein de la Région AWS Canada Ouest (Calgary). N’hésitez pas à développer et à déployer votre infrastructure sur ca‑west‑1 dès aujourd’hui.

from AWS News Blog https://ift.tt/5lgFyBv

via IFTTT

Tuesday, December 19, 2023

AWS India customers can now save card information for monthly AWS billing

Today, AWS India customers can now securely save their credit or debit cards in their AWS accounts according to the Reserve Bank of India (RBI) guidelines. Customers can use their saved cards to make payments for their AWS invoices.

Previously, customers needed to manually enter their card information in the payments console for each payment. Now they can save their cards in their accounts by providing consent, according to RBI guidelines. Customers can save their cards when they sign up for AWS, through the payment console by adding a card in payment preferences, or while making a payment for an invoice.

Getting started with saving your cards for billing

To get started, go to Payment preferences in the AWS Billing and Cost Management console. Choose Add Payment method to add debit or credit card payment.

Enable the Credit or debit card option and input the card details and billing address. You also need to provide consent by selecting the checkbox Save card information for faster future payments.

You will be redirected to your bank website to verify the card information. After authentication, AWS India will store the card token securely for future payments. You can also save the card information when signing up for AWS or paying an existing invoice.

To learn more, see Managing your payments in India in the AWS Billing documentation.

Now available

This feature is available now for all customers using debit and credit cards issued in India with AWS India as their seller of record. There is no impact on cards issued outside of India, and you can continue to save and use these cards as you do today.

You can choose whether to save your cards. However, we recommend that you do so because it will ensure your purchase and payment experience remains as seamless as before.

Give it a try now and send feedback through your usual AWS Support contacts.

— Channy

from AWS News Blog https://ift.tt/aMArREJ

via IFTTT

Monday, December 18, 2023

AWS Weekly Roundup — AWS Lambda, AWS Amplify, Amazon OpenSearch Service, Amazon Rekognition, and more — December 18, 2023

My memories of Amazon Web Services (AWS) re:Invent 2023 are still fresh even when I’m currently wrapping up my activities in Jakarta after participating in AWS Community Day Indonesia. It was a great experience, from delivering chalk talks and having thoughtful discussions with AWS service teams, to meeting with AWS Heroes, AWS Community Builders, and AWS User Group leaders. AWS re:Invent brings the global AWS community together to learn, connect, and be inspired by innovation. For me, that spirit of connection is what makes AWS re:Invent always special.

Here’s a quick look of my highlights at AWS re:Invent and AWS Community Day Indonesia:

If you missed AWS re:Invent, you can watch the keynotes and sessions on demand. Also, check out the AWS News Editorial Team’s Top announcements of AWS re:Invent 2023 for all the major launches.

Recent AWS launches

Here are some of the launches that caught my attention in the past two weeks:

Query MySQL and PostgreSQL with AWS Amplify – In this post, Channy wrote how you can now connect your MySQL and PostgreSQL databases to AWS Amplify with just a few clicks. It generates a GraphQL API to query your database tables using AWS CDK.

Migration Assistant for Amazon OpenSearch Service – With this self-service solution, you can smoothly migrate from your self-managed clusters to Amazon OpenSearch Service managed clusters or serverless collections.

AWS Lambda simplifies connectivity to Amazon RDS and RDS Proxy – Now you can connect your AWS Lambda to Amazon RDS or RDS proxy using the AWS Lambda console. With a guided workflow, this improvement helps to minimize complexities and efforts to quickly launch a database instance and correctly connect a Lambda function.

New no-code dashboard application to visualize IoT data – With this announcement, you can now visualize and interact with operational data from AWS IoT SiteWise using a new open source Internet of Things (IoT) dashboard.

Amazon Rekognition improves Face Liveness accuracy and user experience – This launch provides higher accuracy in detecting spoofed faces for your face-based authentication applications.

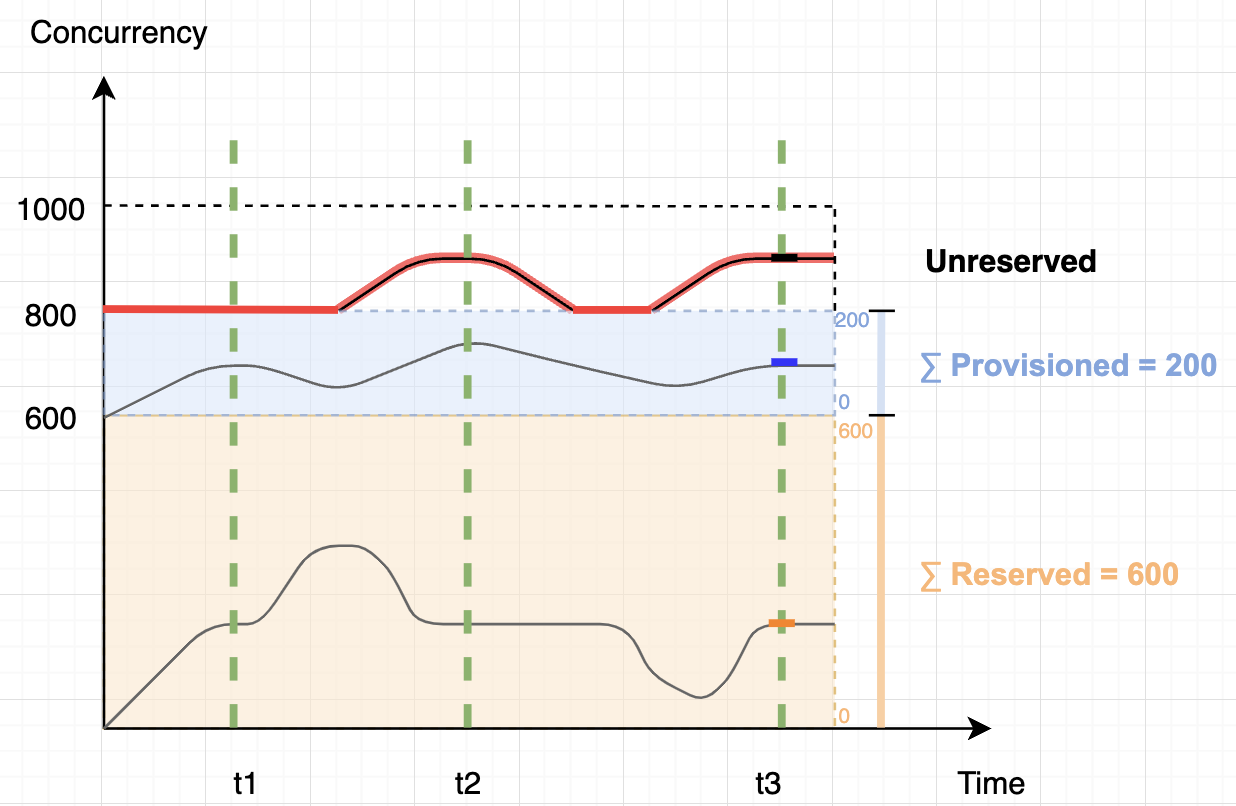

AWS Lambda supports additional concurrency metrics for improved quota monitoring – Add CloudWatch metrics for your Lambda quotas, to improve visibility into concurrency limits.

AWS Malaysia now supports 3D-Secure authentication – This launch enables 3DS2 transaction authentication required by banks and payment networks, facilitating your secure online payments.

Announcing AWS CloudFormation template generation for Amazon EventBridge Pipes – With this announcement, you can now streamline the deployment of your EventBridge resources with CloudFormation templates, accelerating event-driven architecture (EDA) development.

Enhanced data protection for CloudWatch Logs – With the enhanced data protection, CloudWatch Logs helps identify and redact sensitive data in your logs, preventing accidental exposure of personal data.

Send SMS via Amazon SNS in Asia Pacific – With this announcement, now you can use SMS messaging across Asia Pacific from the Jakarta Region.

Lambda adds support for Python 3.12 – This launch brings the latest Python version to your Lambda functions.

CloudWatch Synthetics upgrades Node.js runtime – Now you can use Node.js 16.1 runtimes for your canary functions.

Manage EBS Volumes for your EC2 fleets – This launch simplifies attaching and managing EBS volumes across your EC2 fleets.

See you next year!

This is the last AWS Weekly Roundup for this year, and we’d like to thank you for being our wonderful readers. We’ll be back to share more launches for you on January 8, 2024.

Happy holidays!

— Donnie

from AWS News Blog https://ift.tt/Uczl2ox

via IFTTT

Thursday, December 14, 2023

Wednesday, December 13, 2023

Tuesday, December 12, 2023

New for AWS Amplify – Query MySQL and PostgreSQL database for AWS CDK

Today we are announcing the general availability to connect and query your existing MySQL and PostgreSQL databases with support for AWS Cloud Development Kit (AWS CDK), a new feature to create a real-time, secure GraphQL API for your relational database within or outside Amazon Web Services (AWS). You can now generate the entire API for all relational database operations with just your database endpoint and credentials. When your database schema changes, you can run a command to apply the latest table schema changes.

In 2021, we announced AWS Amplify GraphQL Transformer version 2, enabling developers to develop more feature-rich, flexible, and extensible GraphQL-based app backends even with minimal cloud expertise. This new GraphQL Transformer was redesigned from the ground up to generate extensible pipeline resolvers to route a GraphQL API request, apply business logic, such as authorization, and communicate with the underlying data source, such as Amazon DynamoDB.

However, customers wanted to use relational database sources for their GraphQL APIs such as their Amazon RDS or Amazon Aurora databases in addition to Amazon DynamoDB. You can now use @model types of Amplify GraphQL APIs for both relational database and DynamoDB data sources. Relational database information is generated to a separate schema.sql.graphql file. You can continue to use the regular schema.graphql files to create and manage DynamoDB-backed types.

When you simply provide any MySQL or PostgreSQL database information, whether behind a virtual private cloud (VPC) or publicly accessible on the internet, AWS Amplify automatically generates a modifiable GraphQL API that securely connects to your database tables and exposes create, read, update, or delete (CRUD) queries and mutations. You can also rename your data models to be more idiomatic for the frontend. For example, a database table is called “todos” (plural, lowercase) but is exposed as “ToDo” (singular, PascalCase) to the client.

With one line of code, you can add any of the existing Amplify GraphQL authorization rules to your API, making it seamless to build use cases such as owner-based authorization or public read-only patterns. Because the generated API is built on AWS AppSync‘ GraphQL capabilities, secure real-time subscriptions are available out of the box. You can subscribe to any CRUD events from any data model with a few lines of code.

Getting started with your MySQL database in AWS CDK

The AWS CDK lets you build reliable, scalable, cost-effective applications in the cloud with the considerable expressive power of a programming language. To get started, install the AWS CDK on your local machine.

$ npm install -g aws-cdkRun the following command to verify the installation is correct and print the version number of the AWS CDK.

$ cdk –versionNext, create a new directory for your app:

$ mkdir amplify-api-cdk

$ cd amplify-api-cdkInitialize a CDK app by using the cdk init command.

$ cdk init app --language typescriptInstall Amplify’s GraphQL API construct in the new CDK project:

$ npm install @aws-amplify/graphql-api-constructOpen the main stack file in your CDK project (usually located in lib/<your-project-name>-stack.ts). Import the necessary constructs at the top of the file:

import {

AmplifyGraphqlApi,

AmplifyGraphqlDefinition

} from '@aws-amplify/graphql-api-construct';Generate a GraphQL schema for a new relational database API by executing the following SQL statement on your MySQL database. Make sure to output the results to a .csv file, including column headers, and replace <database-name> with the name of your database, schema, or both.

SELECT

INFORMATION_SCHEMA.COLUMNS.TABLE_NAME,

INFORMATION_SCHEMA.COLUMNS.COLUMN_NAME,

INFORMATION_SCHEMA.COLUMNS.COLUMN_DEFAULT,

INFORMATION_SCHEMA.COLUMNS.ORDINAL_POSITION,

INFORMATION_SCHEMA.COLUMNS.DATA_TYPE,

INFORMATION_SCHEMA.COLUMNS.COLUMN_TYPE,

INFORMATION_SCHEMA.COLUMNS.IS_NULLABLE,

INFORMATION_SCHEMA.COLUMNS.CHARACTER_MAXIMUM_LENGTH,

INFORMATION_SCHEMA.STATISTICS.INDEX_NAME,

INFORMATION_SCHEMA.STATISTICS.NON_UNIQUE,

INFORMATION_SCHEMA.STATISTICS.SEQ_IN_INDEX,

INFORMATION_SCHEMA.STATISTICS.NULLABLE

FROM INFORMATION_SCHEMA.COLUMNS

LEFT JOIN INFORMATION_SCHEMA.STATISTICS ON INFORMATION_SCHEMA.COLUMNS.TABLE_NAME=INFORMATION_SCHEMA.STATISTICS.TABLE_NAME AND INFORMATION_SCHEMA.COLUMNS.COLUMN_NAME=INFORMATION_SCHEMA.STATISTICS.COLUMN_NAME

WHERE INFORMATION_SCHEMA.COLUMNS.TABLE_SCHEMA = '<database-name>';

Run the following command, replacing <path-schema.csv> with the path to the .csv file created in the previous step.

$ npx @aws-amplify/cli api generate-schema \

--sql-schema <path-to-schema.csv> \

--engine-type mysql –out lib/schema.sql.graphqlYou can open schema.sql.graphql file to see the imported data model from your MySQL database schema.

input AMPLIFY {

engine: String = "mysql"

globalAuthRule: AuthRule = {allow: public}

}

type Meals @model {

id: Int! @primaryKey

name: String!

}

type Restaurants @model {

restaurant_id: Int! @primaryKey

address: String!

city: String!

name: String!

phone_number: String!

postal_code: String!

...

}If you haven’t already done so, go to the Parameter Store in the AWS Systems Manager console and create a parameter for the connection details of your database, such as hostname/url, database name, port, username, and password. These will be required in the next step for Amplify to successfully connect to your database and perform GraphQL queries or mutations against it.

In the main stack class, add the following code to define a new GraphQL API. Replace the dbConnectionConfg options with the parameter paths created in the previous step.

new AmplifyGraphqlApi(this, "MyAmplifyGraphQLApi", {

apiName: "MySQLApi",

definition: AmplifyGraphqlDefinition.fromFilesAndStrategy(

[path.join(__dirname, "schema.sql.graphql")],

{

name: "MyAmplifyGraphQLSchema",

dbType: "MYSQL",

dbConnectionConfig: {

hostnameSsmPath: "/amplify-cdk-app/hostname",

portSsmPath: "/amplify-cdk-app/port",

databaseNameSsmPath: "/amplify-cdk-app/database",

usernameSsmPath: "/amplify-cdk-app/username",

passwordSsmPath: "/amplify-cdk-app/password",

},

}

),

authorizationModes: { apiKeyConfig: { expires: cdk.Duration.days(7) } },

translationBehavior: { sandboxModeEnabled: true },

});This configuration assums that your database is accessible from the internet. Also, the default authorization mode is set to Api Key for AWS AppSync and the sandbox mode is enabled to allow public access on all models. This is useful for testing your API before adding more fine-grained authorization rules.

Finally, deploy your GraphQL API to AWS Cloud.

$ cdk deployYou can now go to the AWS AppSync console and find your created GraphQL API.

Choose your project and the Queries menu. You can see newly created GraphQL APIs compatible with your tables of MySQL database, such as getMeals to get one item or listRestaurants to list all items.

For example, when you select items with fields of address, city, name, phone_number, and so on, you can see a new GraphQL query. Choose the Run button and you can see the query results from your MySQL database.

When you query your MySQL database, you can see the same results.

How to customize your GraphQL schema for your database

To add a custom query or mutation in your SQL, open the generated schema.sql.graphql file and use the @sql(statement: "") pass in parameters using the :<variable> notation.

type Query {

listRestaurantsInState(state: String): Restaurants @sql("SELECT * FROM Restaurants WHERE state = :state;”)

}For longer, more complex SQL queries, you can reference SQL statements in the customSqlStatements config option. The reference value must match the name of a property mapped to a SQL statement. In the following example, a searchPosts property on customSqlStatements is being referenced:

type Query {

searchPosts(searchTerm: String): [Post]

@sql(reference: "searchPosts")

}Here is how the SQL statement is mapped in the API definition.

new AmplifyGraphqlApi(this, "MyAmplifyGraphQLApi", {

apiName: "MySQLApi",

definition: AmplifyGraphqlDefinition.fromFilesAndStrategy( [path.join(__dirname, "schema.sql.graphql")],

{

name: "MyAmplifyGraphQLSchema",

dbType: "MYSQL",

dbConnectionConfig: {

// ...ssmPaths,

}, customSqlStatements: {

searchPosts: // property name matches the reference value in schema.sql.graphql

"SELECT * FROM posts WHERE content LIKE CONCAT('%', :searchTerm, '%');",

},

}

),

//...

});

The SQL statement will be executed as if it were defined inline in the schema. The same rules apply in terms of using parameters, ensuring valid SQL syntax, and matching return types. Using a reference file keeps your schema clean and allows the reuse of SQL statements across fields. It is best practice for longer, more complicated SQL queries.

Or you can change a field and model name using the @refersTo directive. If you don’t provide the @refersTo directive, AWS Amplify assumes that the model name and field name exactly match the database table and column names.

type Todo @model @refersTo(name: "todos") {

content: String

done: Boolean

}When you want to create relationships between two database tables, use the @hasOne and @hasMany directives to establish a 1:1 or 1:M relationship. Use the @belongsTo directive to create a bidirectional relationship back to the relationship parent. For example, you can make a 1:M relationship between a restaurant and its meals menus.

type Meals @model {

id: Int! @primaryKey

name: String!

menus: [Restaurants] @hasMany(references: ["restaurant_id"])

}

type Restaurants @model {

restaurant_id: Int! @primaryKey

address: String!

city: String!

name: String!

phone_number: String!

postal_code: String!

meals: Meals @belongsTo(references: ["restaurant_id"])

...

}Whenever you make any change to your GraphQL schema or database schema in your DB instances, you should deploy your changes to the cloud:

Whenever you make any change to your GraphQL schema or database schema in your DB instances, you should re-run the SQL script and export to .csv step mentioned earlier in this guide to re-generate your schema.sql.graphql file and then deploy your changes to the cloud:

$ cdk deploy

To learn more, see Connect API to existing MySQL or PostgreSQL database in the AWS Amplify documentation.

Now available

The relational database support for AWS Amplify now works with any MySQL and PostgreSQL databases hosted anywhere within Amazon VPC or even outside of AWS Cloud.

Give it a try and send feedback to AWS re:Post for AWS Amplify, the GitHub repository of Amplify GraphQL API, or through your usual AWS Support contacts.

— Channy

P.S. Specially thanks to René Huangtian Brandel, a principal product manager at AWS for his contribution to write sample codes.

from AWS News Blog https://ift.tt/aQ3uoMp

via IFTTT