Wednesday, February 26, 2025

Monday, February 24, 2025

Anthropic’s Claude 3.7 Sonnet hybrid reasoning model is now available in Amazon Bedrock

Amazon Bedrock is expanding its foundation model (FM) offerings as the generative AI field evolves. Today, we’re excited to announce the availability of Anthropic’s Claude 3.7 Sonnet foundation model in Amazon Bedrock. As Anthropic’s most intelligent model to date, Claude 3.7 Sonnet stands out as their first hybrid reasoning model capable of producing quick responses or extended thinking, meaning it can work through difficult problems using careful, step-by-step reasoning. Additionally, today we are adding Claude 3.7 Sonnet to the list of models used by Amazon Q Developer. Amazon Q is built on Bedrock, and with Amazon Q you can use the most appropriate model for a specific task such as Claude 3.7 Sonnet, for more advanced coding workflows that enable developers to accelerate building across the entire software development lifecycle.

Key highlights of Claude 3.7 Sonnet

Here are several notable features and capabilities of Claude 3.7 Sonnet in Amazon Bedrock.

The first Claude model with hybrid reasoning – Claude 3.7 Sonnet takes a different approach to how models think. Instead of using separate models (one for quick answers and another for solving complex problems), Claude 3.7 Sonnet integrates reasoning as a core capability within a single model. This combination is more similar to how the human brain works. After all, we use the same brain whether we’re answering a simple question or solving a difficult puzzle.

The model has two modes—standard and extended thinking mode—which can be toggled in Amazon Bedrock. In standard mode, Claude 3.7 Sonnet is an improved version of Claude 3.5 Sonnet. In extended thinking mode, Claude 3.7 Sonnet takes additional time to analyze problems in detail, plan solutions, and consider multiple perspectives before providing a response, allowing it to make further gains in performance. You can control speed and cost by choosing when to use reasoning capabilities. Extended thinking tokens count towards the context window and are billed as output tokens.

Anthropic’s most powerful model for coding – Claude 3.7 Sonnet is state-of-the-art for coding, excelling in understanding context and creative problem solving. According to Anthropic, it achieves an industry-leading 70.3% in standard mode on SWE-bench Verified. Claude 3.7 Sonnet also performs better than Claude 3.5 Sonnet across the majority of benchmarks. These enhanced capabilities make Claude 3.7 Sonnet ideal for powering AI agents and complex workflows.

Source: https://www.anthropic.com/news/claude-3-7-sonnet

Over 15x longer output capacity than its predecessor – Compared to Claude 3.5 Sonnet, this model offers significantly expanded output length. This enhanced capacity is particularly useful when you explicitly request more detail, ask for multiple examples, or request additional context or background information. To achieve long outputs, try asking for a detailed outline (for writing use cases, you can specify outline detail down to the paragraph level and include word count targets). Then, ask for the response to index its paragraphs to the outline and reiterate the word counts. Claude 3.7 Sonnet supports outputs up to 128K tokens long (up to 64K as generally available and up to 128K as a beta).

Adjustable reasoning budget – You can control the budget for thinking when you use Claude 3.7 Sonnet in Amazon Bedrock. This flexibility helps you weigh the trade-offs between speed, cost, and performance. By allocating more tokens to reasoning for complex problems or limiting tokens for faster responses, you can optimize performance for your specific use case.
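As a rough illustration of the reasoning budget, here is a minimal Python sketch that builds a request for the Amazon Bedrock Converse API with extended thinking enabled. The model ID shown, the helper names, and the default token limits are assumptions for illustration, so check the model ID and the current limits in the Amazon Bedrock documentation before relying on them. The sketch assumes the thinking budget is passed through `additionalModelRequestFields`, that it must be at least 1,024 tokens, and that it must be smaller than the maximum output tokens.

```python
import json

# Assumption: inference profile ID for Claude 3.7 Sonnet; confirm it in your console.
MODEL_ID = "us.anthropic.claude-3-7-sonnet-20250219-v1:0"
MIN_THINKING_BUDGET = 1024  # assumed minimum thinking budget, in tokens


def build_converse_request(prompt, thinking_budget=None, max_tokens=4096):
    """Build keyword arguments for the bedrock-runtime Converse call.

    thinking_budget=None keeps the model in standard mode; an integer
    enables extended thinking with that many reasoning tokens.
    """
    request = {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }
    if thinking_budget is not None:
        if thinking_budget < MIN_THINKING_BUDGET:
            raise ValueError("thinking budget is below the assumed minimum")
        if thinking_budget >= max_tokens:
            raise ValueError("thinking budget must be smaller than max_tokens")
        request["additionalModelRequestFields"] = {
            "thinking": {"type": "enabled", "budget_tokens": thinking_budget}
        }
    return request


def send(request, region="us-east-1"):
    """Send the request. boto3 is imported lazily so the builder runs offline."""
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    return client.converse(**request)


# Print the request body for a 2,000-token thinking budget.
print(json.dumps(build_converse_request("Plan tonight's staffing.", 2000), indent=2))
```

A larger budget gives the model more room on hard problems, while omitting the budget keeps responses fast and cheaper, because thinking tokens are billed as output tokens.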

Claude 3.7 Sonnet in action

As with any new model, I have to request access in the Amazon Bedrock console. In the navigation pane, I choose Model access under Bedrock configurations. Then, I choose Modify model access to request access for Claude 3.7 Sonnet.

To try Claude 3.7 Sonnet, I choose Chat / Text under Playgrounds in the navigation pane. Then I choose Select model and choose Anthropic under the Categories and Claude 3.7 Sonnet under the Models. To enable the extended thinking mode, I toggle Model reasoning under Configurations. I type the following prompt, and choose Run:

You're the manager of a small restaurant facing these challenges:

Three staff members called in sick for tonight's dinner service

You're expecting a full house (80 seats)

There's a large party of 20 coming at 7 PM

Your main chef is available but two kitchen helpers are among those who called in sick

You have 2 regular servers and 1 trainee available

How would you:

Reorganize the available staff to handle the situation

Prioritize tasks and service

Determine if you need to make any adjustments to reservations

Handle the large party while maintaining service quality

Minimize negative impact on customer experience

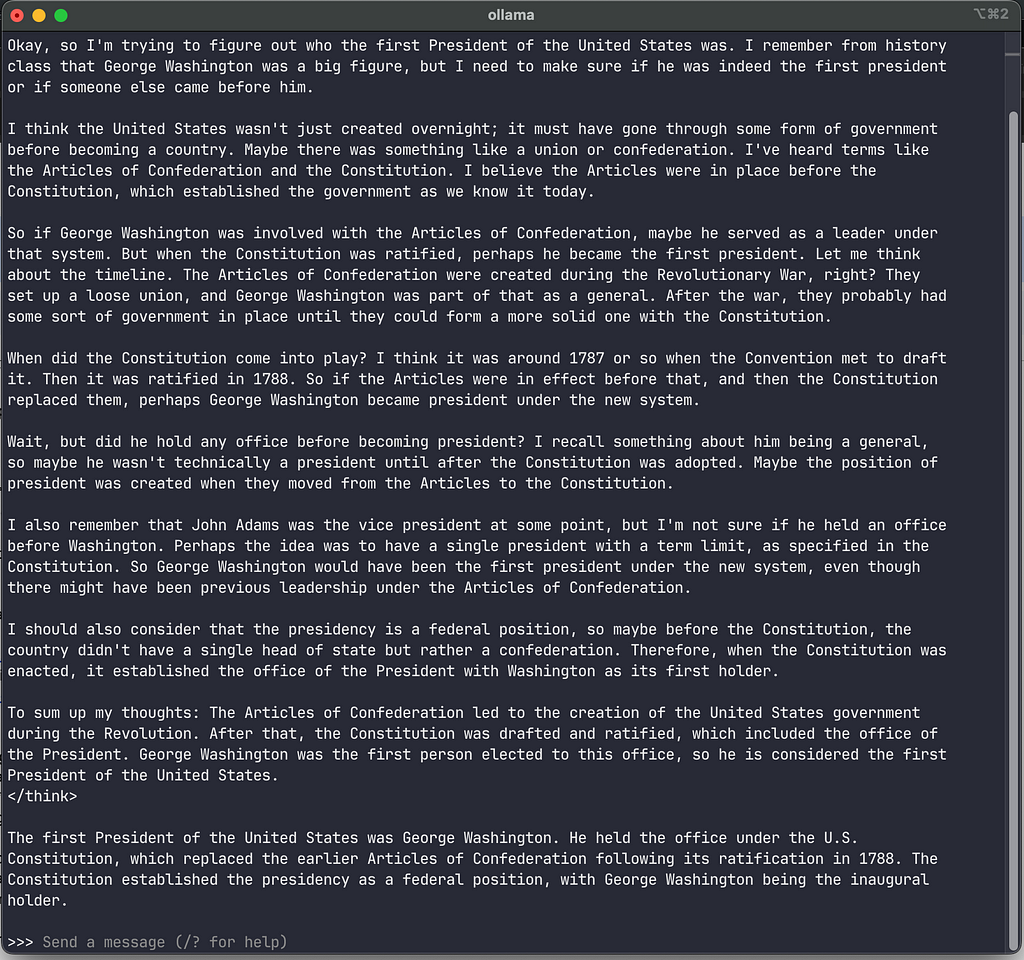

Explain your reasoning for each decision and discuss potential trade-offs

Here’s the result with an animated image showing the reasoning process of the model.

To test image-to-text vision capabilities, I upload an image of a detailed architectural site plan created using Amazon Bedrock. I receive a detailed analysis and reasoned insights of this site plan.

Claude 3.7 Sonnet can also be accessed through the AWS SDKs by using the Amazon Bedrock API. To learn more about Claude 3.7 Sonnet’s features and capabilities, visit the Anthropic’s Claude in Amazon Bedrock product detail page.
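When calling the model through an SDK with extended thinking enabled, the response carries the reasoning and the final answer as separate content blocks. The following Python sketch separates the two. It assumes the Converse API response shape, where reasoning arrives under `reasoningContent` and the answer under `text`. The `sample_response` dictionary is hand-written for illustration and is not real model output.

```python
def split_reasoning(response):
    """Return (reasoning, answer) strings from a Converse-style response dict."""
    reasoning_parts, answer_parts = [], []
    for block in response["output"]["message"]["content"]:
        if "reasoningContent" in block:
            # Redacted reasoning blocks carry no readable text, so default to "".
            reasoning_text = block["reasoningContent"].get("reasoningText", {})
            reasoning_parts.append(reasoning_text.get("text", ""))
        elif "text" in block:
            answer_parts.append(block["text"])
    return "\n".join(reasoning_parts), "\n".join(answer_parts)


# Illustrative response only; field values are made up for this sketch.
sample_response = {
    "output": {
        "message": {
            "role": "assistant",
            "content": [
                {"reasoningContent": {"reasoningText": {"text": "Count the staff first."}}},
                {"text": "Move the trainee to drinks and hosting."},
            ],
        }
    }
}

reasoning, answer = split_reasoning(sample_response)
print("Reasoning:", reasoning)
print("Answer:", answer)
```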

Get started with Claude 3.7 Sonnet today

Claude 3.7 Sonnet’s enhanced capabilities can benefit multiple industry use cases. Businesses can create advanced AI assistants and agents that interact directly with customers. In fields such as healthcare, it can assist in medical imaging analysis and research summarization, and financial services can benefit from its abilities to solve complex financial modeling problems. For developers, it serves as a coding companion that can review code, explain technical concepts, and suggest improvements across different languages.

Anthropic’s Claude 3.7 Sonnet is available today in the US East (N. Virginia), US East (Ohio), and US West (Oregon) Regions. Check the full Region list for future updates.

Claude 3.7 Sonnet is priced competitively and matches the price of Claude 3.5 Sonnet. For pricing details, refer to the Amazon Bedrock pricing page.

To get started with Claude 3.7 Sonnet in Amazon Bedrock, visit the Amazon Bedrock console and Amazon Bedrock documentation.

— Esra

from AWS News Blog https://ift.tt/hSEzH98

via IFTTT

AWS Weekly Roundup: Cloud Club Captain Applications, Formula 1®, Amazon Nova Prompt Engineering, and more (Feb 24, 2025)

AWS Developer Day 2025, held on February 20th, showcased how to integrate responsible generative AI into development workflows. The event featured keynotes from AWS leaders including Srini Iragavarapu, Director Generative AI Applications and Developer Experiences, Jeff Barr, Vice President of AWS Evangelism, David Nalley, Director Open Source Marketing of AWS, along with AWS Heroes and technical community members. Watch the full event recording on Developer Day 2025.

Applications are now open through March 6th for the 2025 AWS Cloud Clubs Captains program. AWS Cloud Clubs are student-led groups for post-secondary and independent students, 18 years old and over. Find a club near you on our Meetup page.

Last week’s launches

Here are some launches that got my attention:

Amplify Hosting announces support for IAM roles for server-side rendered (SSR) applications – AWS Amplify Hosting now supports AWS Identity and Access Management (IAM) roles for SSR applications, enabling secure access to AWS services without managing credentials manually. Learn more in the IAM Compute Roles for Server-Side Rendering with AWS Amplify Hosting blog.

AWS WAF enhances Data Protection and logging experience – AWS WAF expands its Data Protection capabilities allowing sensitive data in logs to be replaced with cryptographic hashes (e.g. ‘ade099751d2ea9f3393f0f’) or a predefined static string (‘REDACTED’) before logs are sent to WAF Sample Logs, Amazon Security Lake, Amazon CloudWatch, or other logging destinations.

Announcing AWS DMS Serverless comprehensive premigration assessments – AWS Database Migration Service Serverless (AWS DMS Serverless) now supports premigration assessments for replications to identify potential issues before database migrations begin. The tool analyzes source and target databases, providing recommendations for optimal DMS settings and best practices.

Amazon ECS increases the CPU limit for ECS tasks to 192 vCPUs – Amazon Elastic Container Service (Amazon ECS) now supports CPU limits of up to 192 vCPUs for ECS tasks deployed on Amazon Elastic Compute Cloud (Amazon EC2) instances, an increase from the previous 10 vCPU limit. This enhancement allows customers to more effectively manage resource allocation on larger Amazon EC2 instances.

AWS Network Firewall introduces automated domain lists and insights – AWS Network Firewall now provides automated domain lists and insights by analyzing 30 days of HTTP/S traffic. This helps create and maintain allow-list policies more efficiently, at no extra cost.

AWS announces Backup Payment Methods for invoices – AWS now enables you to set up backup payment methods that automatically activate if primary payment fails. This helps prevent service interruptions and reduces manual intervention for invoice payments.

Keep up with all AWS announcements on the What’s New with AWS? page.

Other AWS news

Here are additional noteworthy items:

AWS Partner Network: Essential training resources for ISV partners – To help scale solutions effectively, AWS provides essential training resources for independent software vendor (ISV) partners in four key areas: AWS Marketplace fundamentals, Foundational Technical Review (FTR), APN Customer Engagement (ACE) program and co-selling, and Partner funding opportunities.

How Formula 1® uses generative AI to accelerate race-day issue resolution – Formula 1® (F1) uses Amazon Bedrock to speed up race-day issue resolution, reducing troubleshooting time from weeks to minutes through a chatbot that analyzes root causes and suggests fixes.

Reducing hallucinations in LLM agents with a verified semantic cache using Amazon Bedrock Knowledge Bases – This blog introduces a solution using Amazon Bedrock Knowledge Bases and Amazon Bedrock Agents to reduce large language model (LLM) hallucinations by implementing a verified semantic cache that checks queries against curated answers before generating new responses, improving accuracy and response times.

Orchestrate an intelligent document processing workflow using tools in Amazon Bedrock – This blog demonstrates an intelligent document processing workflow using Amazon Bedrock tools that combines Anthropic’s Claude 3 Haiku for orchestration and Anthropic’s Claude 3.5 Sonnet (v2) for analysis to handle structured, semi-structured, and unstructured healthcare documents efficiently.

From community.aws

Here are my personal favorite posts from community.aws:

Tracing Amazon Bedrock Agents – Learn how to track and analyze Amazon Bedrock Agents workflows using AWS X-Ray for better observability, by Randy D.

Testing Amazon ECS Network Resilience with AWS FIS – This article demonstrates how to test network resilience in Amazon ECS using AWS FIS with guidance from Amazon Q Developer, by Sunil Govindankutty.

Stop Using Default Arguments in AWS Lambda Functions – Discover why your AWS Lambda costs might be spiralling out of control due to a common Python programming practice, by Stuart Clark.

Amazon Nova Prompt Engineering on AWS: A Field Guide by Brooke – A field guide for using Amazon Nova models, covering prompt engineering patterns and best practices on AWS, by Brooke Jamieson.

Creating Deployment Configurations for EKS with Amazon Q – Amazon Q Developer helps create EKS deployments by providing templates and best practices for Kubernetes configs, by Ricardo Tasso.

Processing WhatsApp Multimedia with Amazon Bedrock Agents: Images, Video, and Documents – I invite you to read my latest blog, which explains how to create a WhatsApp AI assistant using Amazon Bedrock and Amazon Nova models to process multimedia content such as images, videos, documents, and audio.

Upcoming AWS events

Check your calendars and sign up for these upcoming AWS events:

AWS GenAI Lofts – GenAI Lofts offer collaborative spaces and immersive experiences for startups and developers. You can join in-person GenAI Loft San Francisco events such as Hands-on with Agentic Graph RAG Workshop (February 25), Unstructured Data Meetup SF (February 26 – 27) and AI Tinkerers – San Francisco – February 2025 Demos + Science Fair (February 27 – 28). GenAI Loft Berlin has events and workshops on February 24 to March 7 that you can’t miss!

AWS Community Days – Join community-led conferences that feature technical discussions, workshops, and hands-on labs led by expert AWS users and industry leaders from around the world: Milan, Italy (April 2), Bay Area – Security Edition (April 4), Timișoara, Romania (April 10), and Prague, Czech Republic (April 29).

AWS Innovate: Generative AI + Data – Join a free online conference focusing on generative AI and data innovations. Available in multiple geographic regions: APJC and EMEA (March 6), North America (March 13), Greater China Region (March 14), and Latin America (April 8).

AWS Summits – Join free online and in-person events that bring the cloud computing community together to connect, collaborate, and learn about AWS. Register in your nearest city: Paris (April 9), Amsterdam (April 16), London (April 30), and Poland (May 5).

AWS re:Inforce – AWS re:Inforce (June 16–18) in Philadelphia, PA, is our annual learning event devoted to all things AWS cloud security. Registration opens in March, so be ready to join more than 5,000 security builders and leaders.

Create your AWS Builder ID and reserve your alias. Builder ID is a universal login credential that gives you access–beyond the AWS Management Console–to AWS tools and resources, including over 600 free training courses, community features, and developer tools such as Amazon Q Developer.

You can browse all upcoming in-person and virtual events.

That’s all for this week. Stay tuned for next week’s Weekly Roundup!

— Eli

This post is part of our Weekly Roundup series. Check back each week for a quick roundup of interesting news and announcements from AWS!

from AWS News Blog https://ift.tt/TkhHQWV


Monday, February 17, 2025

AWS Weekly Roundup: AWS Developer Day, Trust Center, Well-Architected for Enterprises, and more (Feb 17, 2025)

Join us for the AWS Developer Day on February 20! This virtual event is designed to help developers and teams incorporate cutting-edge yet responsible generative AI across their development lifecycle to accelerate innovation.

In his keynote, Jeff Barr, Vice President of AWS Evangelism, shares his thoughts on the next generation of software development based on generative AI, the skills needed to thrive in this changing environment, and how he sees it evolving in the future.

Get a first look at exciting technical deep dives and product updates about Amazon Q Developer, AWS Amplify, and GitLab Duo with Amazon Q. You get the chance to explore real-world use cases, live coding demos, interactive sessions, and community spotlight sessions with Christian Bonzelet (AWS Community Builder), Hazel Saenz (AWS Serverless Hero), Matt Lewis (AWS Data Hero), and Johannes Koch (AWS DevTools Hero). Please sign up for this event now!

Last week’s launches

Here are some launches that got my attention:

Updating AWS SDK defaults for AWS STS – Following the upcoming changes we shared to the AWS Security Token Service (AWS STS) global endpoint to improve the resiliency and performance of your applications, we’re updating two defaults of AWS Software Development Kits (AWS SDKs) and AWS Command Line Interfaces (AWS CLIs) on July 31, 2025: the default AWS STS endpoint to regional, and the default retry strategy to standard. We recommend that you test your application before the release to avoid an unexpected experience after updating.

Introducing the AWS Trust Center – Chris Betz, CISO at Amazon Web Services (AWS), shared AWS Trust Center, a new online resource communicating how we approach securing your assets in the cloud. This resource is a window into our security practices, compliance programs, and data protection controls that demonstrates how we work to earn your trust every day.

AWS CloudTrail network activity events for VPC endpoint – This feature provides you with a powerful tool to enhance your security posture, detect potential threats, and gain deeper insights into your VPC network traffic. This feature addresses your critical needs for comprehensive visibility and control over your AWS environments.

AWS Verified Access support for non-HTTP resources – AWS Verified Access now extends beyond HTTP apps to provide VPN-less, secure access to non-HTTP resources like Amazon Relational Database Service (Amazon RDS) databases, enabling improved security and enhanced user experience for both web applications and database connections. To learn more, visit the Verified Access endpoints page and a video tutorial.

New subnet management of Network Load Balancer (NLB) – NLBs were previously restricted to only adding subnets in new Availability Zones, and they now support full subnet management, including removal of subnets, matching the capabilities of Application Load Balancer (ALB). This enhancement offers organizations greater control over their network architecture and brings consistency to AWS load balancing services.

Meta SAM 2.1 and Falcon 3 models in Amazon SageMaker JumpStart – You can use Meta’s Segment Anything Model (SAM) 2.1 with state-of-the-art video and image segmentation capabilities in a single model. You can also use the Falcon 3 family with five models ranging from 1 to 10 billion parameters, with a focus on enhancing science, math, and coding capabilities. To learn more, visit SageMaker JumpStart pretrained models and Getting started with Amazon SageMaker JumpStart.

For a full list of AWS announcements, be sure to keep an eye on the What’s New with AWS? page.

Other AWS news

Here are some additional news items that you might find interesting:

AWS Documentation update – Greg Wilson, a lead of AWS Documentation, SDK, and CLI teams shared an insightful blog post about the progress, challenges, and what’s next for technical documentation for 200+ AWS services. It includes AWS Decision Guides for choosing the right service for specific needs; optimizing documents for readability, such as doubled code samples; and improving usability, such as dark mode and auto-suggest with top global navigation controls. You can also learn about how we use generative AI to help create technical documents.

AWS Well-Architected for Enterprises – This is a new free digital course designed for technical professionals who architect, build, and operate AWS solutions at scale. This intermediate-level course will help you optimize your cloud architecture while aligning to your business goals. The course takes approximately 1 hour to complete and includes a knowledge check at the end to reinforce your learning.

Integrating AWS with .NET Aspire – The .NET team at AWS has been working on integrations for connecting your .NET applications to AWS resources. Learn how to automatically deploy AWS application resources using the Aspire.Hosting.AWS NuGet package for .NET Aspire, an open source framework for building cloud-ready applications.

Upcoming AWS events

Check your calendars and sign up for these upcoming AWS events:

AWS Innovate: Generative AI + Data – Join a free online conference focusing on generative AI and data innovations. Available in multiple geographic regions: APJC and EMEA (March 6), North America (March 13), Greater China Region (March 14), and Latin America (April 8).

AWS Summits – Join free online and in-person events that bring the cloud computing community together to connect, collaborate, and learn about AWS. Register in your nearest city: Paris (April 9), Amsterdam (April 16), London (April 30), and Poland (May 5).

AWS GenAI Lofts – GenAI Lofts offer collaborative spaces and immersive experiences for startups and developers. You can join in-person GenAI Loft San Francisco events such as Built on Amazon Bedrock demo nights (April 19), SageMaker Unified Studio Demo for Startups (April 21), and Hands-on with Agentic Graph RAG Workshop (April 25). GenAI Loft Berlin has its Opening Day on February 24 and goes to March 7.

AWS Community Days – Join community-led conferences that feature technical discussions, workshops, and hands-on labs led by expert AWS users and industry leaders from around the world: Karachi, Pakistan (February 22), Milan, Italy (April 2), Bay Area – Security Edition (April 4), Timișoara, Romania (April 10), and Prague, Czech Republic (April 29).

AWS re:Inforce – Mark your calendars for AWS re:Inforce (June 16–18) in Philadelphia, PA. AWS re:Inforce is a learning conference focused on AWS security solutions, cloud security, compliance, and identity. You can subscribe for event updates now!

You can browse all upcoming in-person and virtual events.

That’s all for this week. Check back next Monday for another Weekly Roundup!

— Channy


from AWS News Blog https://ift.tt/EqwgtUi


Friday, February 14, 2025

AI Clean Rooms


Let’s talk about AI Clean Rooms….

Last year we talked about the hidden value in a company’s data assets for AI in a post called 🤷♂️What do Chicken Wings and Steak Tips Have To Do With AI? Everything!😀.

The premise of that post was that AI efforts can thrive on context and domain-specific data. However, one of the challenges to leveraging internal data was that the principal way employees interact with AI is through public chat agents.

Sharing Your Intellectual Property Publicly

Let’s start with a simple truth: AI services like ChatGPT, Claude, or Gemini are incredible, but they are public tools built for everyone. That’s their strength — and their limitation.

Your employees, contractors, or partners should never be using these public, general-purpose chatbots to analyze your company’s marketing performance, financial reports, strategy, …but it is likely happening. Sensitive, internal intellectual property (“IP”) is leaking into these public tools, allowing them to train on the information being shared. Not ideal.

Imagine a competitor asking ChatGPT:

“What is the marketing and product strategy for Company X, specifically on the launch of Brand Y.”

Now imagine a response from ChatGPT that was trained on the internal data, creative, briefs, strategy docs… that were shared by your employees or partners.

Not good.

What Is An AI Clean Room?

Ok, but the tools are so easy to use. If not those public tools, then what? How about private, trusted AI Clean Rooms local to your company or team?

For business, there is a critical need to run specialized, private, and trusted environments, or “walled gardens” for AI. Just as data clean rooms let analysts work with sensitive information without leaking it, AI Clean Rooms isolate proprietary data and models for use in AI.

Sound complicated?

Set Up a Simple AI Clean Room Right Now

Did you know you can run a private AI clean room right on your laptop or desktop right now? Any questions or information you share stay private to your laptop or desktop.

- Go to https://ollama.com/

- Download the Ollama application

- Pick (https://ift.tt/2HPQywx or https://huggingface.co/) an LLM that you want to use (appropriate for your laptop or desktop 👍 )

- Then run your selected model (e.g., ollama run deepseek-r1:1.5b)
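Once a model is running, you can also talk to it from a script instead of the terminal. The Python sketch below sends a prompt to the local Ollama server and prints the reply, so nothing leaves your machine. It assumes Ollama is listening on its default local port (11434) and that the deepseek-r1:1.5b model has already been pulled. The helper names are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; adjust if you changed the port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="deepseek-r1:1.5b"):
    """Build the HTTP request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, model="deepseek-r1:1.5b"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]


# Usage (requires the Ollama app to be running locally):
# print(ask_local_model("Summarize our Q3 marketing brief in three bullets."))
```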

Don’t like interacting that way with DeepSeek? How about using an interface just like ChatGPT’s via Open WebUI?

While this is an overly simplistic view of an AI Clean Room, the crux of the concept applies. Would you prefer employee interactions with AI happen locally or with public ChatGPT?

The Need For AI Clean Rooms Is Real

AI Clean Rooms let organizations harness the best of AI innovation while keeping their “IP” private and their workflows trusted.

Beyond the familiar public chat interfaces, there is an emerging ecosystem of tools, services, and systems that make deploying AI clean rooms a reality today. These AI environments aren’t just about security; they’re about trust, value, and flexibility.

AI Clean Rooms was originally published in Openbridge on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Openbridge - Medium https://ift.tt/Su7hPfK

via Openbridge

Thursday, February 13, 2025

AWS CloudTrail network activity events for VPC endpoints now generally available

Today, I’m happy to announce the general availability of network activity events for Amazon Virtual Private Cloud (Amazon VPC) endpoints in AWS CloudTrail. This feature helps you to record and monitor AWS API activity traversing your VPC endpoints, helping you strengthen your data perimeter and implement better detective controls.

Previously, it was hard to detect potential data exfiltration attempts and unauthorized access to the resources within your network through VPC endpoints. While VPC endpoint policies could be configured to prevent access from external accounts, there was no built-in mechanism to log denied actions or detect when external credentials were used at a VPC endpoint. This often required you to build custom solutions to inspect and analyze TLS traffic, which could be operationally costly and negate the benefits of encrypted communications.

With this new capability, you can now opt in to log all AWS API activity passing through your VPC endpoints. CloudTrail records these events as a new event type called network activity events, which capture both control plane and data plane actions passing through a VPC endpoint.

Network activity events in CloudTrail provide several key benefits:

- Comprehensive visibility – Log all API activity traversing VPC endpoints, regardless of the AWS account initiating the action.

- External credential detection – Identify when credentials from outside your organization are accessing your VPC endpoint.

- Data exfiltration prevention – Detect and investigate potential unauthorized data movement attempts.

- Enhanced security monitoring – Gain insights into all AWS API activity at your VPC endpoints without the need to decrypt TLS traffic.

- Visibility for regulatory compliance – Improve your ability to meet regulatory requirements by tracking all API activity passing through.

Getting started with network activity events for VPC endpoint logging

To enable network activity events, I go to the AWS CloudTrail console and choose Trails in the navigation pane. I choose Create trail to create a new one. I enter a name in the Trail name field and choose an Amazon Simple Storage Service (Amazon S3) bucket to store the event logs. When I create a trail in CloudTrail, I can specify an existing Amazon S3 bucket or create a new bucket to store my trail’s event logs.

If you set Log file SSE-KMS encryption to Enabled, you have two options: Choose New to create a new AWS Key Management Service (AWS KMS) key or choose Existing to choose an existing KMS key. If you choose New, you need to type an alias in the AWS KMS alias field. CloudTrail encrypts your log files with this KMS key and adds the policy for you. The KMS key and the Amazon S3 bucket must be in the same AWS Region. For this example, I use an existing KMS key. I enter the alias in the AWS KMS alias field and leave the rest as default for this demo. I choose Next for the next step.

In the Choose log events step, I choose Network activity events under Events. I choose the event source from the list of AWS services, such as cloudtrail.amazonaws.com, ec2.amazonaws.com, kms.amazonaws.com, s3.amazonaws.com, and secretsmanager.amazonaws.com. I add two network activity event sources for this demo. For the first source, I select the ec2.amazonaws.com option. For Log selector template, I can use templates for common use cases or create fine-grained filters for specific scenarios. For example, to log all API activities traversing the VPC endpoint, I can choose the Log all events template. For this demo, I choose the Log network activity access denied events template to log only access denied events. Optionally, I can enter a name in the Selector name field to identify the log selector template, such as Include network activity events for Amazon EC2.

As a second example, I choose Custom to create custom filters on multiple fields, such as eventName and vpcEndpointId. I can specify specific VPC endpoint IDs or filter the results to include only the VPC endpoints that match specific criteria. For Advanced event selectors, I choose vpcEndpointId from the Field dropdown, choose equals as Operator, and enter the VPC endpoint ID. When I expand the JSON view, I can see my event selectors as a JSON block. I choose Next and after reviewing the selections, I choose Create trail.
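The same selectors can be expressed in code. The Python sketch below builds advanced event selectors equivalent to the two console examples and prints them as JSON, similar to the console's JSON view. It assumes network activity events are selected with `eventCategory` set to `NetworkActivity` and that access denied events are matched with `errorCode` set to `VpceAccessDenied`. The helper names, trail name, and VPC endpoint ID are placeholders, not values from this walkthrough.

```python
import json


def network_activity_selector(name, event_source, vpc_endpoint_id=None, denied_only=False):
    """Build one advanced event selector for CloudTrail network activity events."""
    fields = [
        {"Field": "eventCategory", "Equals": ["NetworkActivity"]},
        {"Field": "eventSource", "Equals": [event_source]},
    ]
    if vpc_endpoint_id:
        # Restrict logging to a single VPC endpoint (custom filter).
        fields.append({"Field": "vpcEndpointId", "Equals": [vpc_endpoint_id]})
    if denied_only:
        # Assumed error code for requests denied at the VPC endpoint.
        fields.append({"Field": "errorCode", "Equals": ["VpceAccessDenied"]})
    return {"Name": name, "FieldSelectors": fields}


def apply_selectors(trail_name, selectors):
    """Attach the selectors to a trail. boto3 is imported lazily."""
    import boto3

    cloudtrail = boto3.client("cloudtrail")
    return cloudtrail.put_event_selectors(
        TrailName=trail_name, AdvancedEventSelectors=selectors
    )


selectors = [
    network_activity_selector(
        "Include network activity events for Amazon EC2",
        "ec2.amazonaws.com",
        denied_only=True,
    ),
    # "vpce-EXAMPLE" is a placeholder endpoint ID.
    network_activity_selector(
        "Custom filter for one endpoint",
        "s3.amazonaws.com",
        vpc_endpoint_id="vpce-EXAMPLE",
    ),
]
print(json.dumps(selectors, indent=2))
```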

After it’s configured, CloudTrail will begin logging network activity events for my VPC endpoints, helping me analyze and act on this data. To analyze AWS CloudTrail network activity events, you can use the CloudTrail console, AWS Command Line Interface (AWS CLI), and AWS SDK to retrieve relevant logs. You can also use CloudTrail Lake to capture, store and analyze your network activity events. If you are using Trails, you can use Amazon Athena to query and filter these events based on specific criteria. Regular analysis of these events can help you maintain security, comply with regulations, and optimize your network infrastructure in AWS.
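For a quick look at delivered log files without setting up Athena, a small script can do a first pass. The Python sketch below reads a CloudTrail log file (gzip-compressed JSON with a top-level `Records` array) and keeps only network activity events that were denied at a VPC endpoint. The field values it matches (`NetworkActivity`, `VpceAccessDenied`) are assumptions based on the event type described above, and the sample records are invented for illustration.

```python
import gzip
import json


def load_records(path):
    """Read one CloudTrail log file delivered to Amazon S3 (gzip-compressed JSON)."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        return json.load(handle).get("Records", [])


def denied_network_events(records):
    """Keep network activity events that were denied at a VPC endpoint."""
    return [
        record
        for record in records
        if record.get("eventCategory") == "NetworkActivity"
        and record.get("errorCode") == "VpceAccessDenied"
    ]


# Invented sample records, trimmed to the fields this sketch inspects.
sample_records = [
    {"eventCategory": "NetworkActivity", "eventName": "ListBuckets",
     "errorCode": "VpceAccessDenied", "vpcEndpointId": "vpce-EXAMPLE"},
    {"eventCategory": "NetworkActivity", "eventName": "GetObject",
     "vpcEndpointId": "vpce-EXAMPLE"},
    {"eventCategory": "Management", "eventName": "CreateTrail"},
]

for event in denied_network_events(sample_records):
    print(event["eventName"], "denied at", event["vpcEndpointId"])
```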

Now available

CloudTrail network activity events for VPC endpoint logging provide you with a powerful tool to enhance your security posture, detect potential threats, and gain deeper insights into your VPC network traffic. This feature addresses your critical needs for comprehensive visibility and control over your AWS environments.

Network activity events for VPC endpoints are available in all commercial AWS Regions.

For pricing information, visit AWS CloudTrail pricing.

To get started with CloudTrail network activity events, visit AWS CloudTrail. For more information on CloudTrail and its features, refer to the AWS CloudTrail documentation.

— Esra

from AWS News Blog https://ift.tt/Gbq6Due


Monday, February 10, 2025

AWS Weekly Roundup: AWS Step Functions, AWS CloudFormation, Amazon Q Developer, and more (February 10, 2025)

We are well settled into 2025 by now, but many people are still catching up with all the exciting new releases and announcements that came out of re:Invent last year. There have been hundreds of re:Invent recap events around the world since the beginning of the year, including in-person all-day official AWS events with multiple tracks to help you discover and dive deeper into the releases you care about, as well as community and virtual events.

Last month, I was lucky to be a co-host for AWS EMEA re:Invent re:Cap which was a nearly 4-hour livestream with experts featuring demos, whiteboard sessions, and a live Q&A. The good news is that you can now watch it on-demand! We had a great team and thousands of people enjoyed learning through the virtual experience. I recommend you check it out or share it with colleagues who have not been able to attend any re:Invent re:Cap events.

The Korean team also did an amazing job hosting their own virtual re:Invent re:Cap event, and it’s also now available on-demand. So if you speak Korean I do recommend you check it out.

If you’re more of a reader, then we have a treat for you. You can download the full official re:Invent re:Cap deck with all the slides covering releases across all areas by visiting community.aws! While there, you can also check all the upcoming in-person re:Invent re:Cap community events remaining across the globe for a chance to still attend one of those in a city near you.

But as we know, new releases, announcements, and updates don’t stop at re:Invent. Every week there are even more, and this is why we have this Weekly Roundup series that you can read every Monday to get the AWS news highlights from the week before.

So here’s what caught my attention last week.

Last week’s AWS Launches

If you use AWS Step Functions you may be interested in these:

- New data source and output options for Distributed Maps – Distributed Maps are a great fit for large-scale parallel document processing. Now, in addition to the existing support for JSON and CSV files, they can process JSONL, as well as semicolon- or tab-delimited files. You can also use new output transformations such as FLATTEN to combine result sets without any additional code.

- Default quota increased to 100,000 state machines and activities per AWS account – The previous default quota for the number of registered state machines and activities per AWS account was 10,000, making this a 10x increase.

Amazon Q Developer also got a couple of updates:

- New simplified setup experience for Amazon Q Developer Pro tier subscriptions – You can now create Amazon Q Developer subscriptions for standalone or AWS Organizations member accounts from the Amazon Q console with a 2-step setup.

- Amazon Q Developer can now troubleshoot console errors in all AWS Commercial Regions – Amazon Q Developer helps you to diagnose common errors when working with Amazon Elastic Compute Cloud (Amazon EC2), Amazon Elastic Container Service (Amazon ECS), Amazon Simple Storage Service (Amazon S3), AWS Lambda, and AWS CloudFormation in the console. It can identify permissions issues, incorrect configurations, and more. This was previously limited to a few Regions, but the feature is now available in all AWS Commercial Regions.

Here are some other releases that caught my attention this week from a variety of other AWS services:

AWS CloudFormation introduces stack refactoring – You can now split your CloudFormation stacks, move resources from one stack to another, and change the logical name of resources within the same stack. This adds a lot of flexibility, enabling you to keep up with changes within your organization and architectures, such as streamlining resource lifecycle management for existing stacks, keeping up with naming convention changes, and other cases. You can refactor your stacks by using the AWS Command Line Interface (AWS CLI) or AWS SDK.

AWS Config now supports 4 new resource types – AWS Config is great for monitoring resources across your AWS environment and helps you align with your company and security policies as well as compliance requirements. It now supports four new resource types, enabling you to monitor Amazon VPC block public access settings, any exceptions made within those settings, S3 Express One Zone bucket policies, and directory bucket settings.

Automated recovery of Microsoft SQL Server on EC2 instances with VSS – You can now use Volume Shadow Copy Service (VSS) to back up Microsoft SQL Server databases to Amazon Elastic Block Store (Amazon EBS) snapshots while the database is running. You can then use an AWS Systems Manager Automation runbook to set a recovery point in time of your preference, and it will restore the database automatically from your VSS-based EBS snapshot without incurring any downtime.

Other updates

Upcoming changes to the AWS Security Token Service (AWS STS) global endpoint – To help improve the resiliency and performance of your applications, we are making changes to the AWS STS global endpoint (https://ift.tt/9EKQqNj), with no action required from customers. Starting in early 2025, requests to the STS global endpoint will be automatically served in the same Region as your AWS deployed workloads. For example, if your application calls sts.amazonaws.com from the US West (Oregon) Region, your calls will be served locally in the US West (Oregon) Region instead of being served by the US East (N. Virginia) Region. These changes will be released in the coming weeks, and we will gradually roll them out to AWS Regions that are enabled by default by mid-2025.

Upcoming AWS and community events

AWS Public Sector Day London, February 27 — Join public sector leaders and innovators to explore how AWS is enabling digital transformation in government, education, and healthcare.

AWS Innovate GenAI + Data Edition — A free online conference focusing on generative AI and data innovations. Available in multiple Regions: APJC and EMEA (March 6), North America (March 13), Greater China Region (March 14), and Latin America (April 8).

Browse more upcoming AWS-led in-person and virtual developer-focused events.

Looking for some reading recommendations? At the beginning of every year Dr. Werner Vogels, VP and CTO of Amazon, publishes a list of recommended books that he believes should have your attention. This year’s list is looking particularly good in my opinion!

That’s it for this week! For a full list of AWS announcements, be sure to keep an eye on the What’s New with AWS page.

See you next time :)

Matheus Guimaraes | @codingmatheus

from AWS News Blog https://ift.tt/KgcT6FV

via IFTTT

Understanding Deferred Transactions in Amazon Seller Central

A deferred transaction refers to a sale where the payment is temporarily held and will be paid out at a future date. Amazon reserves funds from your sales until a specific number of days after your shipments are delivered.

What Are Deferred Transactions?

Deferred transactions are sales proceeds that Amazon holds until certain conditions are met. These conditions typically involve ensuring that the buyer has received the order in the promised condition and that there are sufficient funds to cover potential returns, claims, or chargebacks.

Reasons for Transaction Deferral

Transactions may be deferred for the following reasons:

- Delivery Date Policy (Orders Awaiting Delivery): Orders placed by customers are typically subject to delivery date-based reserve policies. Sales proceeds are reserved until Amazon confirms that the buyer received the order in the promised condition. This policy ensures that a Seller has sufficient funds to fulfill any returns, claims, or chargebacks.

- Invoiced Orders (Orders Pending Buyer Payment): Invoiced orders placed by Amazon Business customers are deferred while awaiting payment by the buyer. These transactions are released after the customer completes their invoice payment, which typically occurs within 30–45 days after the order date. These orders are also subject to delivery date policies, but in most cases, the invoice due date will be later than the reserve period.

Viewing Deferred Transactions

Only released transactions are included for payout in a settlement period. Deferred transactions will not be in your settlement reports. Amazon will not return deferred events in the Finance API, which means deferred transactions will not show up in the API until they are released.

At the moment, to monitor your deferred transactions:

- Navigate to the Transaction View page in Amazon Seller Central.

- Select “Deferred transactions” from the “Transaction Status” dropdown menu.

- Click “Update” to view the deferral reasons and the expected payment release dates for all deferred transactions.

After the payment release date, the transaction status will update to “Released.”

Understanding Delivery Date Reserves

Delivery date reserves ensure you have enough funds to fulfill financial obligations, such as refunds, claims, or chargebacks.

Duration of Fund Reservation

Funds are reserved until a shipment is delivered, plus a reserve period. The standard reserve period is 7 days after the delivery date (“DD + 7” reserve policy). For example, if you sell an item on January 1, and it is delivered on January 6, then under the DD + 7 policy, your funds will become available for disbursement starting on January 14. Your reserve period may be extended following an assessment of your overall risk and historical performance.
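The DD + 7 example above is simple date arithmetic, and a small script can make it easier to project release dates for a batch of deliveries. The sketch below is only an illustration: the function name reserve_release_date is hypothetical, it assumes GNU date (the -d option), and it assumes funds become available the day after the reserve period ends, which is inferred from the single January example rather than stated as a rule. It does not model extended reserve periods decided by a risk assessment.

```shell
#!/bin/sh
# Hypothetical helper (not part of Seller Central): estimate the first day
# that reserved funds become available for disbursement.
# Assumptions: GNU date (-d) is available, and funds are released the day
# after the reserve period ends, as in the January example above.
reserve_release_date() {
  # $1: delivery date as YYYY-MM-DD
  # $2: reserve period in days (defaults to the standard 7)
  date -u -d "$1 +$(( ${2:-7} + 1 )) days" +%F
}

# Delivered on January 6 under the DD + 7 policy
reserve_release_date 2025-01-06 7
```

With GNU date, the call above prints 2025-01-14, matching the example. Passing a larger second argument models a longer reserve period.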

Amount of Funds Held Back

Delivery date reserves are calculated based on shipment delivery dates. You can view the list of transactions and the corresponding amounts subjected to reserve on the Payments Transaction view, and their total is included as part of the deferred transactions line of the Total Balance tile on the Payments Dashboard.

Impact of Delivery Date Reserves

If you recently had a delivery date reserve policy applied to your account, or if you have just started selling, it is normal for all of your funds to be held in reserve.

This is because your reserve amount is based on the funds from shipments that were delivered within your reserve period (with the standard being seven days, or “DD+7”).

When you ship a product, you can view the corresponding payment transaction on Payments > Transaction view. Filter for Transaction status Deferred transactions and click Update to see a list of all transactions currently held in reserve, and the expected payment release date for each transaction.

Funds from these transactions will be released in accordance with the shipment’s delivery date, at which point, the transaction status will update to “released” and funds will be available for transfer to your bank account.

You can also download a detailed report of these transactions by navigating to the Payments Reports Repository and requesting the Report type “Deferred transactions.”

Determining Shipment Delivery Dates

When you use an integrated shipping carrier, Amazon will use the actual delivery date of the order. In the absence of valid tracking data, Amazon will use the latest estimated delivery date (EDD).

Strategies to Accelerate Payment Release

To expedite the release of your funds:

- Prompt Dispatch: Ship your items as soon as possible and confirm dispatch in Manage Orders.

- Provide Valid Tracking Numbers: Use integrated carriers and provide valid tracking numbers when confirming shipments. This gives buyers peace of mind, knowing that their package is on the way.

- Choose Faster Delivery: Opt for faster delivery methods to reduce the time between shipment and delivery.

By understanding and effectively managing deferred transactions, you can better plan your finances and maintain a healthy cash flow as an Amazon seller.

References

Amazon Finance API for FBA Acquisition And Seller Growth

Understanding Deferred Transactions in Amazon Seller Central was originally published in Openbridge on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Openbridge - Medium https://ift.tt/vxh6cuQ

via Openbridge

Thursday, February 6, 2025

Wednesday, February 5, 2025

AWS CodeBuild for macOS adds support for Fastlane

I’m pleased to announce the availability of Fastlane in your AWS CodeBuild for macOS environments. AWS CodeBuild is a fully managed continuous integration service that compiles source code, runs tests, and produces ready-to-deploy software packages.

Fastlane is an open source tool suite designed to automate various aspects of mobile application development. It provides mobile application developers with a centralized set of tools to manage tasks such as code signing, screenshot generation, beta distribution, and app store submissions. It integrates with popular continuous integration and continuous deployment (CI/CD) platforms and supports both iOS and Android development workflows. Although Fastlane offers significant automation capabilities, developers may encounter challenges during its setup and maintenance. Configuring Fastlane can be complex, particularly for teams unfamiliar with the syntax and package management system of Ruby. Keeping Fastlane and its dependencies up to date requires ongoing effort, because updates to mobile platforms or third-party services may necessitate adjustments to existing workflows.

When we introduced CodeBuild for macOS in August 2024, we knew that one of your challenges was to install and maintain Fastlane in your build environment. Although it was possible to manually install Fastlane in a custom build environment, at AWS, we remove the undifferentiated heavy lifting from your infrastructure so you can spend more time on the aspects that matter for your business. Starting today, Fastlane is installed by default, and you can use the familiar command fastlane build in your buildspec.yaml file.

Fastlane and code signing

To distribute an application on the App Store, developers must sign their binary with a private key generated on the Apple Developer portal. This private key, along with the certificate that validates it, must be accessible during the build process. This can be a challenge for development teams because they need to share the development private key (which allows deployment on selected test devices) among team members. Additionally, the distribution private key (which enables publishing on the App Store) must be available during the signing process before uploading the binary to the App Store.

Fastlane is a versatile build system in that it also helps developers manage development and distribution keys and certificates. Developers can use fastlane match to share signing materials in a team and make them securely and easily accessible on individual developers’ machines and in the CI environment. match stores the private keys, the certificates, and the mobile provisioning profiles on secured shared storage. It makes sure that the local build environment, whether it’s a developer laptop or a server machine in the cloud, stays in sync with the shared storage. At build time, it securely downloads the required certificates to sign your app and configures the build machine to allow the codesign utility to pick them up.

match allows the sharing of signing secrets through GitHub, GitLab, Google Cloud Storage, Azure DevOps, and Amazon Simple Storage Service (Amazon S3).

If you already use one of these and you’re migrating your projects to CodeBuild, you don’t have much to do. You only need to make sure your CodeBuild build environment has access to the shared storage (see step 3 in the demo).

Let’s see how it works

If you’re new to Fastlane or CodeBuild, let’s see how it works.

For this demo, I start with an existing iOS project. The project is already configured to be built on CodeBuild. You can refer to my previous blog post, Add macOS to your continuous integration pipelines with AWS CodeBuild, to learn more details.

I’ll show you how to get started in three steps:

- Import your existing signing materials to a shared private GitHub repository

- Configure fastlane to build and sign your project

- Use fastlane with CodeBuild

Step 1: Import your signing materials

Most of the fastlane documentation I read explains how to create a new key pair and a new certificate to get started. Although this is certainly true for new projects, in real life, you probably already have your project and your signing keys. So, the first step is to import these existing signing materials.

Apple App Store uses different keys and certificates for development and distribution (there are also ad hoc and enterprise certificates, but these are outside the scope of this post). You must have three files for each usage (that’s a total of six files):

- A .mobileprovision file that you can create and download from the Apple developer console. The provisioning profile links your identity, the app identity, and the entitlements the app might have.

- A .cer file, which is the certificate emitted by Apple to validate your private key. You can download this from the Apple Developer portal. Select the certificate, then select Download.

- A .p12 file, which contains your private key. You can download the key when you create it in the Apple Developer portal. If you didn’t download it but have it on your machine, you can export it from the Apple Keychain app. Note that the KeyChain.app is hidden in macOS 15.x. You can open it with open /System/Library/CoreServices/Applications/Keychain\ Access.app. Select the key you want to export and right click to select Export.

When you have these files, create a fastlane/Matchfile file with the following content:

git_url("https://github.com/sebsto/secret.git")
storage_mode("git")
type("development")
# or use appstore to use the distribution signing key and certificate
# type("appstore")

Be sure to replace the URL with the URL of your own GitHub repository, and make sure this repository is private. It will serve as storage for your signing key and certificate.

Then, I import my existing files with the fastlane match import --type appstore command. I repeat the command for each environment: appstore and development.

The very first time, fastlane prompts me for my Apple ID username and password. It connects to App Store Connect to verify the validity of the certificates or to create new ones when necessary. The session cookie is stored in ~/.fastlane/spaceship/<your apple user id>/cookie.

fastlane match also asks for a password. It uses this password to generate a key to encrypt the signing materials on the storage. Don’t forget this password because it will be used at build time to import the signing materials on the build machine.

Here is the command and its output in full:

fastlane match import --type appstore

[✔] 🚀
[16:43:54]: Successfully loaded '~/amplify-ios-getting-started/code/fastlane/Matchfile' 📄

+-----------------------------------------------------+
|     Detected Values from './fastlane/Matchfile'     |
+--------------+--------------------------------------+
| git_url      | https://github.com/sebsto/secret.git |
| storage_mode | git                                  |
| type         | development                          |
+--------------+--------------------------------------+

[16:43:54]: Certificate (.cer) path:
./secrets/sebsto-apple-dist.cer
[16:44:07]: Private key (.p12) path:
./secrets/sebsto-apple-dist.p12
[16:44:12]: Provisioning profile (.mobileprovision or .provisionprofile) path or leave empty to skip this file:
./secrets/amplifyiosgettingstarteddist.mobileprovision
[16:44:25]: Cloning remote git repo...
[16:44:25]: If cloning the repo takes too long, you can use the `clone_branch_directly` option in match.
[16:44:27]: Checking out branch master...
[16:44:27]: Enter the passphrase that should be used to encrypt/decrypt your certificates
[16:44:27]: This passphrase is specific per repository and will be stored in your local keychain
[16:44:27]: Make sure to remember the password, as you'll need it when you run match on a different machine
[16:44:27]: Passphrase for Match storage: ********
[16:44:30]: Type passphrase again: ********
security: SecKeychainAddInternetPassword <NULL>: The specified item already exists in the keychain.
[16:44:31]: 🔓 Successfully decrypted certificates repo
[16:44:31]: Repo is at: '/var/folders/14/nwpsn4b504gfp02_mrbyd2jr0000gr/T/d20250131-41830-z7b4ic'
[16:44:31]: Login to App Store Connect (sebsto@mac.com)
[16:44:33]: Enter the passphrase that should be used to encrypt/decrypt your certificates
[16:44:33]: This passphrase is specific per repository and will be stored in your local keychain
[16:44:33]: Make sure to remember the password, as you'll need it when you run match on a different machine
[16:44:33]: Passphrase for Match storage: ********
[16:44:37]: Type passphrase again: ********
security: SecKeychainAddInternetPassword <NULL>: The specified item already exists in the keychain.
[16:44:39]: 🔒 Successfully encrypted certificates repo
[16:44:39]: Pushing changes to remote git repo...
[16:44:40]: Finished uploading files to Git Repo [https://github.com/sebsto/secret.git]

I verify that Fastlane imported my signing material to my Git repository.

I can also configure my local machine to use these signing materials during the next build:

» fastlane match appstore

[✔] 🚀
[17:39:08]: Successfully loaded '~/amplify-ios-getting-started/code/fastlane/Matchfile' 📄

+-----------------------------------------------------+
|     Detected Values from './fastlane/Matchfile'     |
+--------------+--------------------------------------+
| git_url      | https://github.com/sebsto/secret.git |
| storage_mode | git                                  |
| type         | development                          |
+--------------+--------------------------------------+

+-------------------------------------------------------------------------------------------+
|                                 Summary for match 2.226.0                                 |
+----------------------------------------+--------------------------------------------------+
| type                                   | appstore                                         |
| readonly                               | false                                            |
| generate_apple_certs                   | true                                             |
| skip_provisioning_profiles             | false                                            |
| app_identifier                         | ["com.amazonaws.amplify.mobile.getting-started"] |
| username                               | xxxx@xxxxxxxxx                                   |
| team_id                                | XXXXXXXXXX                                       |
| storage_mode                           | git                                              |
| git_url                                | https://github.com/sebsto/secret.git             |
| git_branch                             | master                                           |
| shallow_clone                          | false                                            |
| clone_branch_directly                  | false                                            |
| skip_google_cloud_account_confirmation | false                                            |
| s3_skip_encryption                     | false                                            |
| gitlab_host                            | https://gitlab.com                               |
| keychain_name                          | login.keychain                                   |
| force                                  | false                                            |
| force_for_new_devices                  | false                                            |
| include_mac_in_profiles                | false                                            |
| include_all_certificates               | false                                            |
| force_for_new_certificates             | false                                            |
| skip_confirmation                      | false                                            |
| safe_remove_certs                      | false                                            |
| skip_docs                              | false                                            |
| platform                               | ios                                              |
| derive_catalyst_app_identifier         | false                                            |
| fail_on_name_taken                     | false                                            |
| skip_certificate_matching              | false                                            |
| skip_set_partition_list                | false                                            |
| force_legacy_encryption                | false                                            |
| verbose                                | false                                            |
+----------------------------------------+--------------------------------------------------+

[17:39:08]: Cloning remote git repo...
[17:39:08]: If cloning the repo takes too long, you can use the `clone_branch_directly` option in match.
[17:39:10]: Checking out branch master...
[17:39:10]: Enter the passphrase that should be used to encrypt/decrypt your certificates
[17:39:10]: This passphrase is specific per repository and will be stored in your local keychain
[17:39:10]: Make sure to remember the password, as you'll need it when you run match on a different machine
[17:39:10]: Passphrase for Match storage: ********
[17:39:13]: Type passphrase again: ********
security: SecKeychainAddInternetPassword <NULL>: The specified item already exists in the keychain.
[17:39:15]: 🔓 Successfully decrypted certificates repo
[17:39:15]: Verifying that the certificate and profile are still valid on the Dev Portal...
[17:39:17]: Installing certificate...

+-------------------------------------------------------------------------+
|                          Installed Certificate                          |
+-------------------+-----------------------------------------------------+
| User ID           | XXXXXXXXXX                                          |
| Common Name       | Apple Distribution: Sebastien Stormacq (XXXXXXXXXX) |
| Organisation Unit | XXXXXXXXXX                                          |
| Organisation      | Sebastien Stormacq                                  |
| Country           | US                                                  |
| Start Datetime    | 2024-10-29 09:55:43 UTC                             |
| End Datetime      | 2025-10-29 09:55:42 UTC                             |
+-------------------+-----------------------------------------------------+

[17:39:18]: Installing provisioning profile...

+-------------------------------------------------------------------------------------------------------------------+
|                                          Installed Provisioning Profile                                           |
+---------------------+----------------------------------------------+----------------------------------------------+
| Parameter           | Environment Variable                         | Value                                        |
+---------------------+----------------------------------------------+----------------------------------------------+
| App Identifier      |                                              | com.amazonaws.amplify.mobile.getting-starte  |
|                     |                                              | d                                            |
| Type                |                                              | appstore                                     |
| Platform            |                                              | ios                                          |
| Profile UUID        | sigh_com.amazonaws.amplify.mobile.getting-s  | 4e497882-d80f-4684-945a-8bfec1b310b9         |
|                     | tarted_appstore                              |                                              |
| Profile Name        | sigh_com.amazonaws.amplify.mobile.getting-s  | amplify-ios-getting-started-dist             |
|                     | tarted_appstore_profile-name                 |                                              |
| Profile Path        | sigh_com.amazonaws.amplify.mobile.getting-s  | /Users/stormacq/Library/MobileDevice/Provis  |
|                     | tarted_appstore_profile-path                 | ioning                                       |
|                     |                                              | Profiles/4e497882-d80f-4684-945a-8bfec1b310  |
|                     |                                              | b9.mobileprovision                           |
| Development Team ID | sigh_com.amazonaws.amplify.mobile.getting-s  | XXXXXXXXXX                                   |
|                     | tarted_appstore_team-id                      |                                              |
| Certificate Name    | sigh_com.amazonaws.amplify.mobile.getting-s  | Apple Distribution: Sebastien Stormacq       |
|                     | tarted_appstore_certificate-name             | (XXXXXXXXXX)                                 |
+---------------------+----------------------------------------------+----------------------------------------------+

[17:39:18]: All required keys, certificates and provisioning profiles are installed 🙌

Step 2: Configure Fastlane to sign your project

I create a Fastlane build configuration file in fastlane/Fastfile (you can use the fastlane init command to get started):

default_platform(:ios)

platform :ios do
  before_all do
    setup_ci
  end

  desc "Build and Sign the binary"
  lane :build do
    match(type: "appstore", readonly: true)
    gym(
      scheme: "getting started",
      export_method: "app-store"
    )
  end
end

Make sure that the setup_ci action is added to the before_all section of Fastfile for the match action to function correctly. This action creates a temporary Fastlane keychain with correct permissions. Without this step, you may encounter build failures or inconsistent results.

Then I test a local build with the command fastlane build. I enter the password I used when importing my keys and certificate, then I let the system build and sign my project. When everything is correctly configured, it produces output similar to the following.

...
[17:58:33]: Successfully exported and compressed dSYM file
[17:58:33]: Successfully exported and signed the ipa file:
[17:58:33]: ~/amplify-ios-getting-started/code/getting started.ipa

+---------------------------------------+
|           fastlane summary            |
+------+------------------+-------------+
| Step | Action           | Time (in s) |
+------+------------------+-------------+
| 1    | default_platform | 0           |
| 2    | setup_ci         | 0           |
| 3    | match            | 36          |
| 4    | gym              | 151         |
+------+------------------+-------------+

[17:58:33]: fastlane.tools finished successfully 🎉

Step 3: Configure CodeBuild to use Fastlane

Next, I create a project on CodeBuild. I won’t go through the step-by-step instructions here; you can refer to my previous post or to the CodeBuild documentation.

There is just one Fastlane-specific configuration. To access the signing materials, Fastlane requires access to three secret values that I’ll pass as environment variables:

- MATCH_PASSWORD, the password I entered when importing the signing material. Fastlane uses this password to decrypt the encrypted files in the GitHub repository.

- FASTLANE_SESSION, the value of the Apple ID session cookie, located at ~/.fastlane/spaceship/<your apple user id>/cookie. The session is valid from a couple of hours to multiple days. When the session expires, reauthenticate with the command fastlane spaceauth from your laptop and update the value of FASTLANE_SESSION with the new value of the cookie.

- MATCH_GIT_BASIC_AUTHORIZATION, the base64 encoding of your GitHub username, followed by a colon, followed by a personal access token (PAT) that grants access to your private GitHub repository. You can generate a PAT on the GitHub console in Your Profile > Settings > Developers Settings > Personal Access Token. I use this command to generate the value of this environment variable: echo -n my_git_username:my_git_pat | base64.

Note that for each of these three values, I can enter the Amazon Resource Name (ARN) of the secret on AWS Secrets Manager or the plain text value. We strongly recommend using Secrets Manager to store security-sensitive values.

I’m a security-conscious user, so I store the three secrets in Secrets Manager with these commands:

aws --region $REGION secretsmanager create-secret --name /CodeBuild/MATCH_PASSWORD --secret-string MySuperSecretPassword

aws --region $REGION secretsmanager create-secret --name /CodeBuild/FASTLANE_SESSION --secret-string "$(cat ~/.fastlane/spaceship/my_appleid_username/cookie)"

aws --region $REGION secretsmanager create-secret --name /CodeBuild/MATCH_GIT_BASIC_AUTHORIZATION --secret-string "$(echo -n my_git_username:my_git_pat | base64)"
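One detail worth checking when generating MATCH_GIT_BASIC_AUTHORIZATION: the base64 utility from GNU coreutils wraps its output at 76 characters by default, so a long token can yield a value with embedded line breaks when the command runs on Linux rather than macOS. The following is a minimal sketch of a more defensive variant. The function name is hypothetical and the arguments are placeholders, as in the commands above.

```shell
#!/bin/sh
# Hypothetical helper: build the value for MATCH_GIT_BASIC_AUTHORIZATION.
# printf avoids the portability quirks of `echo -n`, and tr removes the
# line breaks that GNU base64 inserts every 76 characters on long input.
match_git_basic_authorization() {
  # $1: GitHub username
  # $2: personal access token (PAT)
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Placeholder credentials, as in the text above
match_git_basic_authorization my_git_username my_git_pat
```

The output can be passed to --secret-string in double quotes, in the same way as the command substitution shown earlier.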

If your build project refers to secrets stored in Secrets Manager, the build project’s service role must allow the secretsmanager:GetSecretValue action. If you chose New service role when you created your project, CodeBuild includes this action in the default service role for your build project. However, if you chose Existing service role, you must add this action to your service role separately.

For this demo, I use this AWS Identity and Access Management (IAM) policy:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "secretsmanager:GetSecretValue"
            ],
            "Resource": [
                "arn:aws:secretsmanager:us-east-2:012345678912:secret:/CodeBuild/*"
            ]
        }
    ]
}

After I created the project in the CodeBuild section of the AWS Management Console, I enter the three environment variables. Notice that the value is the name of the secret in Secrets Manager.

You can also define the environment variables and their Secrets Manager secret name in your buildspec.yaml file.
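As a rough sketch of that second option, the env section of a buildspec could map each environment variable to its secret as shown here. The secret names are the ones created earlier in this post, and secrets-manager is the buildspec key for this purpose, but treat the fragment as an untested starting point under those assumptions rather than a drop-in configuration.

```yaml
# Fragment only: merge the env section into your existing buildspec file.
version: 0.2

env:
  secrets-manager:
    # VARIABLE_NAME: name (or ARN) of the secret in Secrets Manager
    MATCH_PASSWORD: /CodeBuild/MATCH_PASSWORD
    FASTLANE_SESSION: /CodeBuild/FASTLANE_SESSION
    MATCH_GIT_BASIC_AUTHORIZATION: /CodeBuild/MATCH_GIT_BASIC_AUTHORIZATION
```

Keeping the mapping in the buildspec puts it under version control next to the Fastfile, while the secret values themselves stay in Secrets Manager.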

Next, I modify the buildspec.yaml file at the root of my project to use fastlane to build and sign the binary. My buildspec.yaml file now looks like this one:

# buildspec.yml
version: 0.2

phases:
  install:
    commands:
      - code/ci_actions/00_install_rosetta.sh
  pre_build:
    commands:
      - code/ci_actions/02_amplify.sh
  build:
    commands:
      - (cd code && fastlane build)

artifacts:
  name: getting-started-$(date +%Y-%m-%d).ipa
  files:
    - 'getting started.ipa'
  base-directory: 'code'

The Rosetta and Amplify scripts are required to receive the Amplify configuration for the backend. If you don’t use AWS Amplify in your project, you don’t need these.

Notice that there is nothing in the build file that downloads the signing key or prepares the keychain in the build environment; fastlane match will do that for me.

I add the new buildspec.yaml file and my ./fastlane directory to Git. I commit and push these files.

git commit -m "add fastlane support" && git push

When everything goes well, I can see the build running on CodeBuild and the Succeeded message.

Pricing and availability

Fastlane is now pre-installed at no extra cost on all macOS images that CodeBuild uses, in all Regions where CodeBuild for macOS is available. At the time of this writing, these are US East (Ohio, N. Virginia), US West (Oregon), Asia Pacific (Sydney), and Europe (Frankfurt).

In my experience, it takes a bit of time to configure fastlane match correctly. When it’s configured, having it working on CodeBuild is pretty straightforward. Before trying this on CodeBuild, be sure it works on your local machine. When something goes wrong on CodeBuild, triple-check the values of the environment variables and make sure CodeBuild has access to your secrets on AWS Secrets Manager.
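To make that check less manual, a small preflight function can report which of the three variables is missing before fastlane runs. This is a sketch under stated assumptions: the function name check_fastlane_env is hypothetical, and it only verifies that the variables are non-empty, not that their values are valid.

```shell
#!/bin/sh
# Hypothetical preflight check: fail early when one of the three values
# that fastlane match needs is missing from the build environment.
# It only tests for non-empty values and never prints the secrets.
check_fastlane_env() {
  missing=0
  for name in MATCH_PASSWORD FASTLANE_SESSION MATCH_GIT_BASIC_AUTHORIZATION; do
    # Indirect lookup of the variable named in $name (POSIX-compatible)
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example use before the build command:
#   check_fastlane_env && (cd code && fastlane build)
```

Because the function returns a non-zero status when a variable is missing, chaining it with && stops the build before fastlane match starts.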

Now go build (on macOS)!

from AWS News Blog https://ift.tt/F69mILi

via IFTTT